#33 Software 3.0 and Implications

Plus: Learning Resources Worth Your Time, Can Money Buy Superintelligence, and More

Dear Readers,

In early June, I spoke on a panel on “How AI is Transforming Alternative Asset Management” in San Francisco, hosted by BootstrapLabs, an Ares company. Investors and startups are leveraging AI to push the boundaries of what can be considered investable assets and to reach better decisions faster. Each speaker was solving a different piece of the puzzle (alternative data, embedded finance, supply chain digitization, private credit underwriting at scale), but the goal was identical: operationalizing human judgment with AI instead of replacing it.

In this edition, I cover:

Notable Developments

Can Money Buy Superintelligence? Zuckerberg Thinks It’s Worth a Try.

SEC Rule Changes Might Accelerate AI Adoption in Asset Management

BlackRock Deploys AI Within Fundamental Equity Research

Tech Investor Coatue’s Fantastic 40 List Notably Omits Google

Software 3.0 and Implications

Directors’ Corner: Learning Resources Worth Your Time

Enjoy.

Can Money Buy Superintelligence? Zuckerberg Thinks It’s Worth a Try.

Meta has just confirmed its $14.3 billion investment in Scale AI and handed the reins of Meta’s new Superintelligence lab to Scale’s 28-year-old founder, Alexandr Wang.

Scale AI’s contractor-labor-intensive data-labeling business model is very different from Meta’s, which suggests that running it as an affiliated business adds little value over keeping it as an independent vendor. In addition, the deal has already prompted OpenAI and other Meta competitors to shift significant orders away from Scale. The real prize for Meta may not be Scale’s business, but its leadership and talent.

This fits a broader pattern. Zuckerberg has activated his ‘founder mode’ and has been aggressively pursuing top AI leaders and researchers, sometimes by acquiring or investing in their companies or funds. Before the Scale deal, Meta was in unsuccessful talks to acquire AI search startup Perplexity. Earlier, Meta also attempted to buy Safe Superintelligence and recruit its founder, the renowned AI scientist Ilya Sutskever. When that failed, Zuckerberg pivoted to hiring the startup’s CEO, Daniel Gross, and former GitHub CEO Nat Friedman, by acquiring a stake in the duo’s venture firm. In a recent podcast, Sam Altman criticized Meta for offering outsized, cash-heavy compensation, including sign-on bonuses of $100 million, to lure talent from rivals.

Big questions remain: Can these brilliant minds truly align around a shared vision set by Zuckerberg? What will Meta’s new “superintelligence” team actually do? Is this a defensive move to address Meta’s lagging performance with Llama 4 and stem talent attrition, or an offensive push to leapfrog competitors with an urgent, all-out effort?

From a financial perspective, one thing is clear: both CapEx (AI infrastructure) and OpEx (talent acquisition) will rise sharply. Meta’s “efficiency era” may be over.

SEC Rule Changes Might Accelerate AI Adoption in Asset Management

SEC Chair Paul Atkins withdrew a number of rules proposed under the previous administration, including one that attempted to micro-manage how data analytics and technologies (including AI) are used to give financial advice to investors. This particular rule was widely perceived as close to impossible to implement, and its withdrawal was anticipated. It also means that properly scoped AI oversight at the fund board level becomes even more critical to shareholder protection as innovation in this area is unleashed.

BlackRock Deploys AI Within Fundamental Equity Research

BlackRock is now actively deploying artificial intelligence within its fundamental equity research. The firm’s new in-house platform, dubbed “Asimov,” is being integrated into its fundamental investing teams to augment traditional analyst work. Asimov processes company filings, earnings calls, and reports to surface trends and insights that might otherwise be missed. This move is not about replacing analysts but empowering them with AI-driven tools that can digest vast datasets and highlight key investment opportunities or risks. The initiative reflects an industry-wide evolution toward hybrid models that combine advanced technology with deep domain expertise. For board directors, this signals how leading firms are leveraging AI to stay at the forefront of investment intelligence and decision-making.

Tech Investor Coatue’s Fantastic 40 List Notably Omits Google

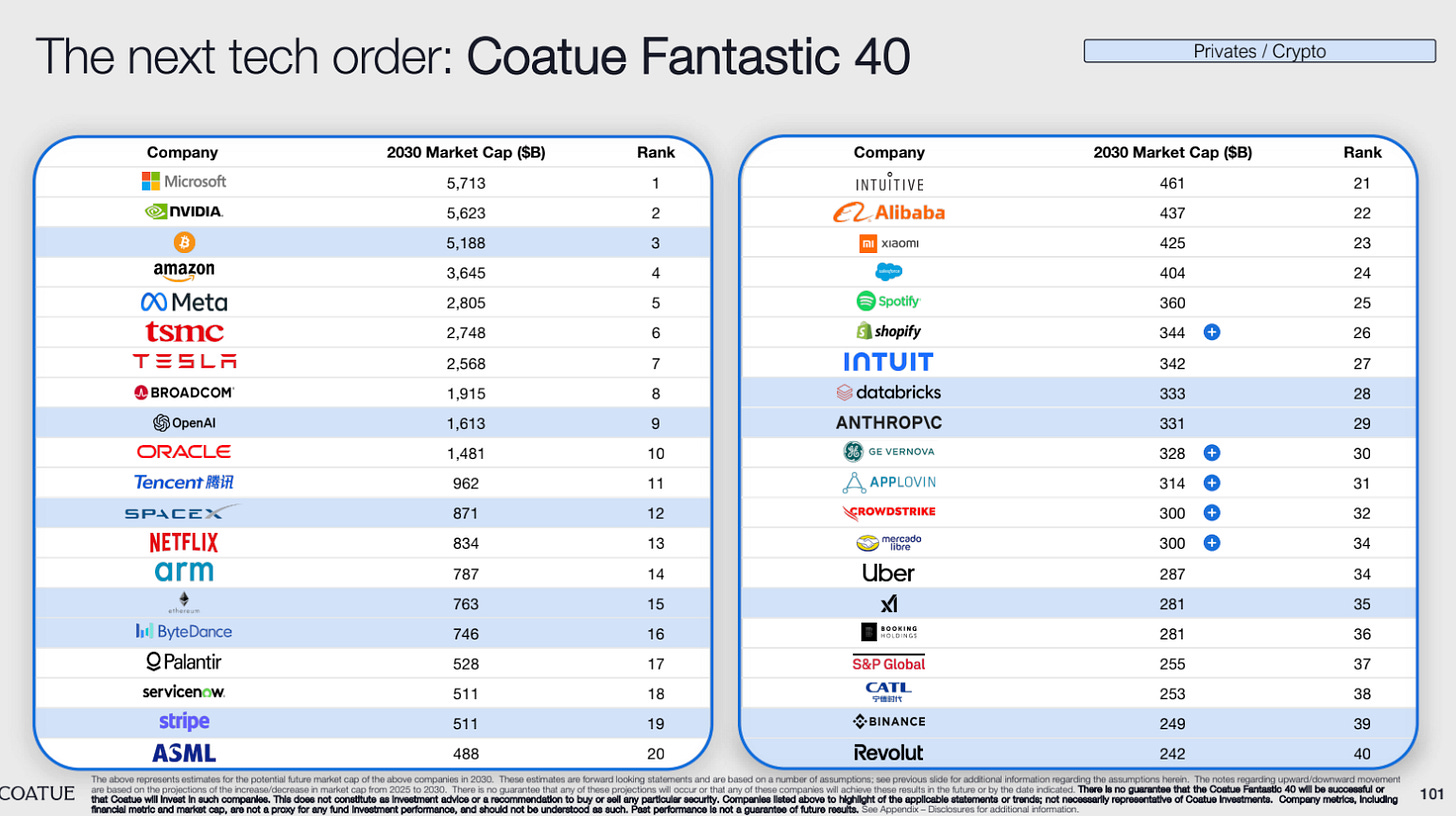

Coatue recently released a 102-page keynote presentation on the evolving technology landscape across public and private companies. Its prediction of the 40 biggest companies by 2030 reflects its own book, based on investment convictions around AI and crypto. What is drawing more reaction is Google’s absence from the list: Coatue believes that the structural wave of people changing their search habits to use AI is hard to reverse. In my last newsletter, I covered what leaders need to know as the world’s habit of information retrieval moves from Google search to AI conversations.

Software 3.0 and Implications

I just watched Andrej Karpathy's latest keynote at YC Startup School, and I'm still processing everything he shared. His talks always leave me thinking, but this one particularly hit home as he traced software's evolution into what he calls the 'Software 3.0' era. This era is defined by LLMs: natural language prompts in English become the primary interface for building and interacting with software, and LLMs function as utilities and/or operating systems. A few things Karpathy shared really stood out:

The Iron Man Analogy:

Rather than replacing humans, AI in Software 3.0 serves to augment human capabilities, like an Iron Man suit that enhances, not replaces, the person inside. AI agents can solve complex problems but also exhibit unpredictable behaviors, such as hallucinations or mixing up information. ‘We have to keep AI on a leash.’

Designing for Partial Autonomy:

Karpathy advocates for building applications with “partial autonomy,” where humans remain in the loop to provide oversight. He draws parallels to Tesla’s Autopilot, where autonomy levels can be adjusted, ensuring reliability and safety as systems scale. Another example he used is agentic coding tools such as Cursor, where a user can dial up or down how autonomous the tool should be.

This resonates deeply with my experience. Surprisingly, even when we simply approve plans proposed by AI agents, we feel a higher level of trust than when we give up our options for control. In practice, partial autonomy lets us tailor the level of oversight to different parts of a workflow. For instance, when I built an investment algorithm app, I let AI decide almost all of the interface design choices, while I verified every step of the math.
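For readers who like to see an idea in code, here is a minimal Python sketch of what a per-step "autonomy dial" could look like. It is my own illustration, not something from Karpathy's talk or from any particular tool: the names `Autonomy`, `Proposal`, `run`, and `reviewer` are all hypothetical, and the reviewer function is only a stand-in for a real person approving or rejecting a plan.

```python
from dataclasses import dataclass
from enum import IntEnum
from typing import Callable, Dict, List


class Autonomy(IntEnum):
    """Hypothetical autonomy levels, from most to least human oversight."""
    REVIEW_EVERY_STEP = 1  # a human approves each proposed action
    AUTO_APPLY = 2         # the AI acts without asking


@dataclass
class Proposal:
    step: str     # which part of the workflow the action belongs to
    summary: str  # what the AI agent wants to do


def run(proposals: List[Proposal],
        policy: Dict[str, Autonomy],
        approve: Callable[[Proposal], bool]) -> List[str]:
    """Apply proposals, pausing for approval where the policy requires it."""
    applied = []
    for p in proposals:
        # Steps missing from the policy default to the most cautious level.
        level = policy.get(p.step, Autonomy.REVIEW_EVERY_STEP)
        if level == Autonomy.AUTO_APPLY or approve(p):
            applied.append(p.summary)
    return applied


# Illustrative policy: free rein on interface design, full review on the math.
policy = {"interface": Autonomy.AUTO_APPLY, "math": Autonomy.REVIEW_EVERY_STEP}

proposals = [
    Proposal("interface", "use a two-column layout"),
    Proposal("math", "annualize volatility with sqrt(252)"),
    Proposal("math", "skip the covariance check"),
]


def reviewer(p: Proposal) -> bool:
    """Stand-in for a human reviewer who rejects anything that skips a check."""
    return "skip" not in p.summary


applied = run(proposals, policy, reviewer)
print(applied)
```

The design choice worth noticing is the default: any step nobody thought to classify falls back to full human review, so the dial only loosens where someone has deliberately loosened it.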

Vibe Coding and Rapid Prototyping:

Karpathy encourages “vibe coding” (he coined the phrase, after all): describing an app’s desired functionality in plain language and letting AI handle the rest. He emphasizes the importance of verification and iteration, as well as shipping early with oversight and scaling later.

As I reflected on Karpathy's insights, three implications for leaders became clear:

The strategic window to capitalize on the shift is now

The talk highlights that the opportunity to capitalize on this shift is narrow, perhaps 12–18 months. Organizations must become more fluid and adaptable to keep pace with rapid change, especially as customers and non-technical staff begin to build software themselves.

Your growth and customer success strategies have to include AI as your audience

Software 3.0 means that you will increasingly face AI as a companion or gatekeeper standing between you and your customers. It is never too early to think about what that means for your business.

Your ‘taste’ could be your competitive advantage

I recently rebuilt an investment portfolio optimization application with Claude Code. It took one-third of the time it used to, and the experience made me appreciate all the more what we leaders bring to the table:

With AI models and agents becoming more intelligent and autonomous at 'implementing' by the day, we will allocate more and more of our time to design, evaluation, and quality assurance.

We've spent years developing "taste" in our fields: that intuitive sense and judgment of what good looks like, what will work in practice, what feels right or wrong about an approach. This expertise becomes our true competitive advantage.

Everyone's talking about AI upskilling: learning prompting techniques, building applications, mastering new tools. But don't underestimate what you already bring to the table. Think about how to translate that into communicating with AI and helping it build something people really want.

Learning Resources Worth Your Time

Board directors often ask me to recommend AI learning resources, and here are a few recent ones worth your time:

Ethan Mollick's Updated AI Tools Guide

Professor Mollick just refreshed his practical guide on which AI systems to use and how to use them effectively. It's a quick read that cuts through the noise.

Anthropic's New AI Fluency Course

This 12-lesson, self-paced course caught my attention because it focuses on human-AI collaboration rather than just explaining the technology. The framework centers on four key competencies: Delegation, Description, Discernment, and Diligence. What I find valuable is that it teaches you to engage with AI systems effectively regardless of which specific tool you're using. The prompting tactics will change, but the framework is designed to stay relevant. The whole course takes about 3 hours - perfect for busy schedules.

Moving from Individual Learning to Organizational Assessment

While the first two resources focus on personal AI fluency, board directors also need frameworks to evaluate whether their leadership teams are building AI-capable organizations.

Zapier CEO Wade Foster's Real-World AI Fluency Framework offers something I rarely see: actual interview questions and assessment criteria that Zapier is using right now to evaluate AI fluency. Their four-level framework moves beyond "do you use ChatGPT?" to assess genuine AI integration, ranging from "Unacceptable" (resistant to AI tools) to "Transformative" (using AI to rethink strategy and deliver new value). What caught my attention are the specific interview questions they're asking across different roles - for marketing: "How do you use AI to personalize messaging?" For product teams: "Give an example of using AI in a product feature." For leaders evaluating AI readiness in their organizations, this gives you a practical starting point to adapt for your industry and roles, with the refreshing honesty that the specifics will evolve but the framework for assessing strategic AI integration remains solid.

Thanks for reading.

Joyce