#22 Here Come AI Agents

Plus: Fed Survey of AI Home Use, What If Things Go Very Right, AI Use Policy Template for Boards, AI as a Thought Partner, and More

Dear Readers,

I started this newsletter as my way of 'learning in the open' about the complicated, overwhelming, yet transformative world of artificial intelligence in finance, investing and board governance. Instead of trying to cover every piece of AI news, I focus on topics that I believe matter more strategically and have longer-lasting impact. Some of what I share here reflects my own views, still evolving as I learn – and I welcome different opinions.

I write from a non-technical perspective, breaking down complex AI concepts to explore their business implications. Surprisingly, I've received wonderful feedback from tech experts who find value in this business lens. Their comments made my day!

The engagement from this community continually delights and motivates me. At two different conferences last week, I was asked "When is your next newsletter coming out?" by people I deeply respect. The opportunity to share this learning journey with you, my dear readers and friends, is such a blessing.

With that encouragement, I'll also try to publish more frequently whenever I can. But no promises!

In this issue, I cover:

Notable developments: Fed survey on AI home use; more companies turn to modular nuclear power plants for AI’s energy needs

Move Aside, Copilots; Here Come AI Agents

What If Things Go Very Right with AI?

Directors’ Corner: AI Use Policy - A Critical Tool for Board Oversight

Using AI as a Thought Partner: A Voice-Driven Approach

One More Thing: How do college students prepare when AI takes away some entry level jobs?

Enjoy.

Notable Developments

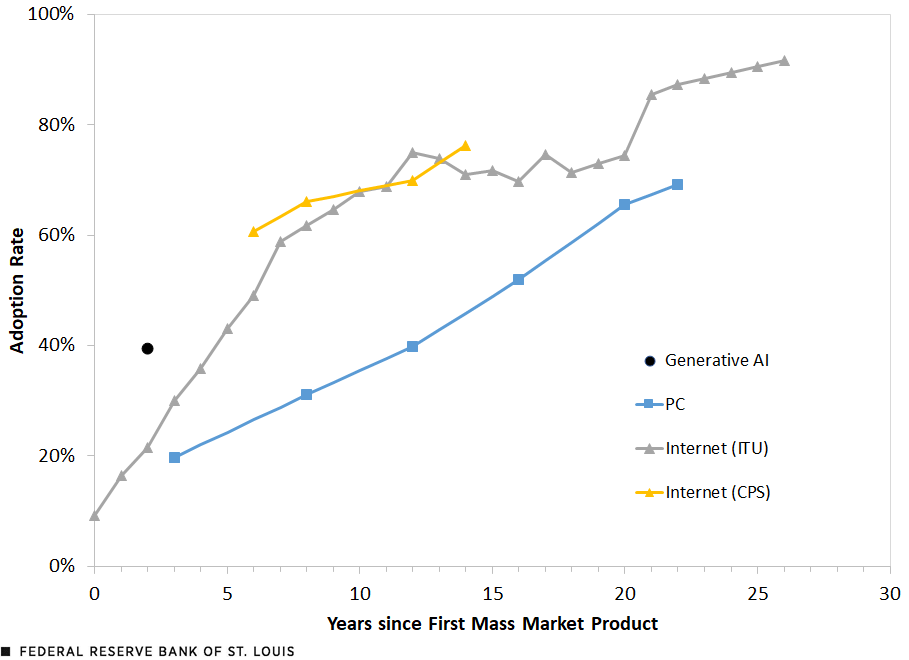

1. Fed Survey Shows Faster Adoption of AI than Internet

The Federal Reserve Bank of St. Louis conducted the first nationally representative U.S. survey of generative AI adoption at work and at home. Almost 40% of the U.S. population ages 18 to 64 used generative AI to some degree. Adoption has been much faster than that of the internet or the PC in their early years.

The nationwide survey follows the same timing and structure as the Current Population Survey (CPS), the monthly labor force survey conducted by the U.S. Census Bureau for the Bureau of Labor Statistics. For this reason, the survey is particularly interesting: it goes beyond the business leaders or people in specific industries that other AI adoption surveys typically cover.

2. Even More AI Companies Turn to Nuclear Power

After Microsoft, more AI companies are turning to nuclear power to meet the surging energy demands of their data centers and AI operations. Amazon has invested $500 million in developing small modular reactors and signed agreements with Energy Northwest and Dominion Energy. Google has partnered with Kairos Power to purchase nuclear energy from small modular reactors. These tech giants are betting on nuclear power as a scalable, carbon-free energy source to fuel their AI ambitions.

Move Aside, Copilots; Here Come AI Agents

Back in February, I wrote about AI's agentic future in this newsletter, arguing that chatbots were far from sufficient for handling real business workflows. Eight months later, this shift is happening faster than I anticipated. At San Francisco Tech Week this month, AI agents dominated the conversation - and for good reason. Just a few days ago, Anthropic released its “Computer Use” feature, which acts like an assistant that completes multi-step workflows on your computer. The demo below is well worth your time.

Sequoia Capital recently published a compelling analysis titled "Generative AI's Act o1: The Agentic Reasoning Era Begins." Their key insight is that the agentic future is closer because AI is learning to think, not just respond. When OpenAI released its latest model (known as o1 or Strawberry), it demonstrated something remarkable: AI that can "stop and think" before responding. Think of it like the difference between a quick-witted intern who can immediately answer questions and a thoughtful analyst who takes time to reason through complex problems.

According to Sequoia, this capability is transforming how AI delivers value. Instead of selling software licenses, companies are now selling work outcomes. Take Sierra, which offers AI customer service agents - it charges per resolved customer issue. Or Factory's "droids" that handle end-to-end software engineering tasks just as a human engineer would.

When cloud computing emerged, traditional software companies insisted they could simply deliver their existing products over the internet. They missed that cloud wasn't just a delivery mechanism - it required fundamentally rethinking how software was built, sold, and supported. I'm seeing the same pattern with AI agents.

For boards and business leaders, this marks a shift from basic automation to true workflow transformation. We're not just automating discrete tasks - we're looking at AI systems that can handle complex, multi-step workflows across legal, customer service, software development, and other knowledge work domains. As Sequoia emphasizes, the market opportunity isn't the $350B software market - it's the multi-trillion dollar services market.

Leading AI companies - OpenAI, Anthropic, Google, and Meta - are now providing developers with direct access to their agentic capabilities. A recent a16z analysis suggests 47-56% of all work tasks could be enhanced by AI-powered agents.

Forward-thinking companies are already piloting these AI agents in controlled environments, starting with "copilot" mode where AI assists human workers, then gradually transitioning to more autonomous operation as reliability is proven.

As is always the case with AI, the question isn't whether AI agents will impact your business and investments - it's how quickly you'll adapt when they do.

What If Things Go Very Right with AI?

We often talk about AI's shortcomings, its risks, and the consequences of hasty adoption. But what if things go remarkably right? What if we achieve AI systems that can match or exceed human intelligence across multiple domains, operating 10-100 times faster than we do?

In his long essay "Machines of Loving Grace," Anthropic CEO Dario Amodei envisions what he calls "a country of geniuses in a datacenter" - millions of instances of superintelligent systems working in parallel, each operating at 10-100x human speed.

[Sidebar: Although less known than OpenAI, Anthropic has emerged as a leader in AI development, combining technical innovation with an emphasis on responsible AI. The company's Claude model (my favorite app currently) stands out for its analytical capabilities and nuanced communication, particularly in writing and complex reasoning tasks.]

This idea of compressed progress warrants careful consideration. In investing, we project years into the future and discount back to present value. With AI, we may need to radically compress that timeline - imagine experiencing all technological advancement from 1970 to 2020 in just ten years. Not through a sudden "singularity," but through the systematic application of vastly increased intelligence to existing problems.
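To make the discounting intuition concrete, here is a rough worked example. The payoff of 100 and the 8% discount rate are purely illustrative assumptions, not figures from Amodei's essay; the point is only to show how sensitive present value is to when a payoff arrives.

```latex
% Present value of a single payoff C received T years from now,
% discounted at an annual rate r (C = 100 and r = 8% are assumed values).
\[
PV = \frac{C}{(1+r)^{T}}
\]
\[
T = 50:\quad PV = \frac{100}{(1.08)^{50}} \approx 2.13
\qquad
T = 10:\quad PV = \frac{100}{(1.08)^{10}} \approx 46.32
\]
\[
\frac{PV_{T=10}}{PV_{T=50}} = (1.08)^{40} \approx 21.7
\]
```

Under these assumptions, pulling the same payoff forward from year 50 to year 10 makes it worth roughly 22 times more today, which is why a compressed timeline can change a valuation so sharply.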

Understanding where this acceleration happens requires analyzing what Amodei calls "returns to intelligence" alongside five limiting factors: the speed of the outside world, need for data, intrinsic complexity, human constraints, and physical laws.

"Economists often talk about 'factors of production': things like labor, land, and capital... I believe that in the AI age, we should be talking about the marginal returns to intelligence, and trying to figure out what the other factors are that are complementary to intelligence and that become limiting factors when intelligence is very high.” - Dario Amodei, CEO of Anthropic

Consider biology: many breakthrough discoveries come from a tiny number of researchers making unexpected connections. High returns to intelligence here suggest we might see ten CRISPRs and fifty CAR-T therapies in a decade - essentially compressing the entire 21st century's expected biological progress into its first third. However, some constraints remain fixed: cells grow at a certain speed, clinical trials take time, and physics sets hard limits.

The economic implications unfold in stages. Initially, comparative advantage keeps humans relevant and potentially more productive as AI complements human work. Long-term, as AI becomes broadly effective and cheap, our economic structure may need fundamental rethinking. This pattern isn't unprecedented - civilization has navigated transitions from hunting and gathering to farming to industrialization. Each time, society adapted in ways that would have been hard to imagine beforehand.

For business leaders, this suggests different questions about AI.

Instead of asking "how can AI improve our current processes?" we might ask "where in our industry do returns to intelligence compound, and what are our real constraints?" Some sectors might see decades of progress in months, while others advance only as fast as their physical or human constraints allow.

The competitive implications extend beyond technology. Organizations that understand and plan for this compressed timeline - while realistically accounting for real-world constraints - may find themselves better positioned. This isn't about incremental improvement; it's about preparing for fundamental shifts in what's possible.

There's an interesting tension here: while this vision seems radical, it follows naturally from continuing current trends and basic human aspirations for better health, prosperity, and capability. The biggest risk might not be overestimating AI's long-term impact - but underestimating the speed and scale of change once key thresholds are crossed. In times of such potential transformation, maintaining optionality while preparing for acceleration could be the most prudent strategy.

AI Use Policy: A Critical Tool for Board Oversight

While management is responsible for drafting and implementing AI use policies, directors play a crucial role in ensuring these policies align with corporate strategy, risk management, and ethical considerations. A well-crafted AI use policy, developed by management and overseen by the board, helps organizations ensure responsible innovation while managing potential risks. Without such a policy, companies may face ethical breaches, regulatory non-compliance, reputational damage, and legal liabilities.

To assist boards in evaluating management's AI use policies, here are key elements that directors should look for, grouped into four essential categories:

Strategic Alignment and Governance

Clear definition of the policy's purpose and scope

Outline of approved and prohibited AI use cases

Established roles for human oversight and intervention

Ethics and Transparency

Mandated ethical impact assessments

Requirements for explainable AI decision-making processes

Commitment to diverse representation in AI development

Data and Security

Robust data governance guidelines

Defined incident response procedures

Criteria for AI vendor selection and compliance

Implementation and Continuous Improvement

AI literacy programs for staff

Regular audits and KPIs for AI initiatives

Scheduled policy reviews and updates

Boards should expect management to present regular updates on the policy's effectiveness and any necessary revisions. Directors should ask probing questions about how the policy is being implemented, its impact on operations, and any challenges encountered.

Templates to Start With

Here are three resources to get you started:

AI policy template from the SANS Institute

AI policy template for not-for-profit organizations from NFPS.AI

AI Risk Management Framework from NIST

Using AI as a Thought Partner: A Voice-Driven Approach

I like talking to my AI thought partner when I walk my dog, do the laundry or clean the house. We all have different thinking styles, and sometimes 'talking out loud', literally, can help us clarify our own thoughts. AI can be a patient partner and assistant to help us with this brainstorming. I've shared this simple trick with some busy people and they all loved it! So let me also share it here.

It really doesn't matter which AI app you use on your phone, as long as it has a voice mode (typically indicated by a microphone icon at the bottom). I've used ChatGPT, Claude, and Perplexity. Here are the steps:

1. Set the stage

Before starting, decide on a specific role for the AI. This focuses the interaction and sets clear expectations.

Example: "You're my marketing strategist with extensive experience in […]. I need your expertise in digital campaigns."

2. Provide context and instructions

Clearly explain your needs and how you want the AI to respond. You can upload relevant documents or specify whether you’d like AI to search the internet (in the case of Perplexity or ChatGPT). Please make sure your AI use is in compliance with your company’s policy. Do not share sensitive information.

Example: "I'm going to outline a campaign idea. Don't interrupt. After I finish, provide specific feedback on feasibility and potential improvements."

3. Present your ideas

Speak your thoughts. Depending on your needs, you may ask the AI to hold off on responding until you finish.

Example: "I have a campaign idea that targets millennials using influencer partnerships and interactive social media challenges..."

4. Request targeted feedback

Ask for specific insights or critiques.

Example: "What are the three strongest elements of this plan? Where are the potential weaknesses?"

5. Explore alternatives

Use the AI to broaden your perspective.

Example: "Suggest two alternative approaches that could achieve the same goals."

6. Refine and iterate

Use follow-up questions to hone ideas.

Example: "How could we modify the social media challenge to increase viral potential?"

7. Summarize and document

Ask the AI to recap key points and create a memo.

Example: "Summarize our discussion and draft a brief memo."
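For readers who like to keep their prompts handy, the seven steps above can be captured as a small reusable script. This is only a minimal sketch: the names `Step`, `STEPS`, and `render_script` are hypothetical and chosen for illustration, the prompts are simply the examples from this issue, and nothing here calls any AI app. It just prints a cue sheet you can read aloud in voice mode.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Step:
    """One stage of the voice session: a title plus the prompt to speak."""
    title: str
    prompt: str


# The prompts are the examples from this newsletter; swap in your own.
# The "[…]" placeholder is left for you to fill in.
STEPS = [
    Step("Set the stage",
         "You're my marketing strategist with extensive experience in […]. "
         "I need your expertise in digital campaigns."),
    Step("Provide context and instructions",
         "I'm going to outline a campaign idea. Don't interrupt. After I finish, "
         "provide specific feedback on feasibility and potential improvements."),
    Step("Present your ideas",
         "I have a campaign idea that targets millennials using influencer "
         "partnerships and interactive social media challenges..."),
    Step("Request targeted feedback",
         "What are the three strongest elements of this plan? "
         "Where are the potential weaknesses?"),
    Step("Explore alternatives",
         "Suggest two alternative approaches that could achieve the same goals."),
    Step("Refine and iterate",
         "How could we modify the social media challenge to increase viral potential?"),
    Step("Summarize and document",
         "Summarize our discussion and draft a brief memo."),
]


def render_script(steps):
    """Return a numbered cue sheet, two lines per step (title, then prompt)."""
    lines = []
    for number, step in enumerate(steps, start=1):
        lines.append(f"{number}. {step.title}")
        lines.append(f'   Say: "{step.prompt}"')
    return "\n".join(lines)


if __name__ == "__main__":
    print(render_script(STEPS))
```

Keeping the prompts in one list makes it easy to reuse the same flow for a different role or topic by editing only the text.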

Experiment a few times and you'll find the approach that works best for you.

While we are on the topic of thinking, I’d also like to share this interesting article on Every.to titled “Five New Thinking Styles for Working With Thinking Machines”.

"In Software 2.0, problems of science—which is about formal theories and rules—become problems of engineering, which is about accomplishing an outcome. This shift—from science to engineering—will have a massive impact on how we think about solving problems, and how we understand the world."

One More Thing

I am sometimes invited to speak to college and business school students on 'AI in Finance'. We typically cover corporate finance, capital markets, investment management, and M&A.

However, as AI is commoditizing some entry-level job skills, managers are increasingly valuing qualities like curiosity, storytelling, critical thinking, and cross-disciplinary expertise. Meanwhile, the traditional recruiting process is not set up to identify these qualities early in the job application process.

What advice would you offer to college graduates with business or finance majors who are entering this exhilarating yet uncertain job market? How can they best prepare for the AI-driven future?

If you find this newsletter valuable, please share it with your network. I greatly appreciate it.

Thank you.

Joyce Li