This Week in AI: Agentic AI, Gemini, ChatGPT Penalty, Regulatory Spotlight on AI, AI Tools, and More

👋 Hi! This is Joyce Li. Welcome to the second issue of "AI Simplified for Leaders," your weekly digest aimed at unraveling the complexities of artificial intelligence for business leaders, board members, and investors.

This week, I talk about AI Agents, my initial impression of Gemini, ChatGPT Penalty in talent evaluation, the regulatory spotlight on AI, and some tools for podcast note-taking. Past issues can be found here.

The Agentic AI Future

In a week marked by Sam Altman's ambitious plan to raise up to $7 trillion for AI chip development, a subtler yet significant shift is unfolding within the AI landscape. According to a report by The Information, OpenAI has been working on developing AI agents that can automate complex tasks. These sophisticated programs, designed to perform tasks autonomously, mark the dawn of a new automation era, promising to surpass traditional chatbots and software tools in capability.

AI agents are being tailored to function both on user devices and across the web. They are engineered to execute a diverse array of tasks — from migrating data between spreadsheets and documents to orchestrating travel plans and securing flight bookings — all possibly without human oversight. This advancement addresses the limitations of current chatbot applications in business workflows, which, despite their proficiency in generating human-like text, are inadequate for tasks necessitating complex reasoning, decision-making, and execution.

Nonetheless, the path to fully autonomous AI agents is fraught with hurdles. A significant challenge is AI hallucination, a phenomenon where models confidently produce factually incorrect information. This issue becomes critical in applications demanding legal compliance and precision, highlighting the need for advanced mechanisms to verify the reliability and accuracy of agent outputs.

The deployment of AI agents also prompts crucial questions regarding rights, permissions, observability, and risk management. To ensure these agents adhere to ethical and legal standards, robust oversight is imperative. This includes auditing capabilities to review an agent's actions and decisions, ensuring accountability and transparency.

Megan Ma, Asst. Director of Codex, and John Nay, Codex Affiliate and Founder & CEO of Norm.ai, address these challenges in their recent article “A Supervisory AI Agent Approach to Responsible Use of GenAI in the Legal Profession”, published on the website of Stanford Law School. The article underscores the importance of supervisory AI agents in maintaining compliance with legal and ethical norms, particularly in the legal sector, where accuracy and regulatory adherence are crucial. The discussion extends to the potential of supervisory AI agents to unlock safe AI deployment in sensitive domains by providing verification, assurance, and oversight mechanisms.

AI agents' promise lies in their ability to automate reasoning and tool selection. Utilizing large language models (LLMs) and techniques like web search and Retrieval-Augmented Generation (RAG) for dynamic planning, they can significantly boost efficiency and decision-making across various sectors, from legal to business operations.

However, the integration of AI agents is not devoid of challenges. Concerns over increased costs, latency, and issues surrounding control and accountability loom large. To foster responsible AI agent usage, observability mechanisms are crucial, enabling stakeholders to trace an agent's actions. Additionally, escalation controls for human review and approval of actions are essential.
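For readers who want to see what observability and escalation controls can look like in practice, here is a minimal Python sketch. It is an illustration under stated assumptions, not how OpenAI or any vendor implements agents: the `AuditedAgent` class, the `HIGH_RISK_ACTIONS` set, and the action names are all hypothetical. Every action is written to an audit log, and actions flagged as high risk run only if a human approver signs off.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Callable

# Hypothetical list of actions that require human sign-off before running.
HIGH_RISK_ACTIONS = {"book_flight", "send_payment"}


@dataclass
class AuditedAgent:
    # Callback standing in for a human reviewer: returns True to approve.
    approver: Callable[[str, dict], bool]
    # Observability: every attempted action is recorded here.
    audit_log: list = field(default_factory=list)

    def act(self, action: str, params: dict) -> str:
        # Escalation control: high-risk actions need explicit approval.
        if action in HIGH_RISK_ACTIONS and not self.approver(action, params):
            status = "blocked"
        else:
            status = "executed"  # a real agent would call its tool here
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "action": action,
            "params": params,
            "status": status,
        })
        return status
```

In this sketch, an approver that rejects bookings above a spending limit would leave a "blocked" entry in the log, so a reviewer can later trace both what the agent did and what it was prevented from doing.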

The transition towards AI agents heralds a promising yet complex phase in artificial intelligence's evolution, offering the potential to automate and enhance decision-making processes across numerous fields. Yet, this shift also brings to light the intricacies of embedding AI into complex human endeavors. Addressing these intricacies, ensuring AI agents' lawfulness, ethics, and reliability, is paramount for their successful implementation and societal acceptance. For business leaders, navigating this new wave of AI innovation demands a balanced approach, emphasizing not just technological advancements but also the ethical and legal frameworks that enable these agents to function effectively and responsibly in the real world.

Gemini Ultra and Advanced - Teething Troubles

On February 8, Google unveiled Gemini Advanced, powered by the cutting-edge Gemini Ultra 1.0 AI model, marking a significant evolution from its predecessor, Bard, now rebranded as Gemini (free).

This launch introduces a more sophisticated AI with future integration of Google’s productivity tools and cloud services. However, my early experiences with Gemini Advanced reveal some initial challenges, including the AI's reluctance to answer questions or generate images on some common topics, and difficulties integrating web search links into responses. Additionally, I cannot find an easy way to opt out of permitting my conversations to be used to train the AI models.

On the other hand, the bundling of Gemini Advanced with Google's cloud storage for a monthly fee similar to ChatGPT Plus presents an intriguing value proposition.

The ChatGPT Penalty: Should We Avoid It in Modern Talent Assessment?

A board director I admire at a prominent nonprofit confided that she had decided to discard applications suspected of being written by ChatGPT. I urged her to reconsider.

The director’s stance reflects a recent trend of talent and content evaluation in many other domains. Lizzie Wolkovich, a respected researcher, had her paper rejected due to her writing style, which a reviewer claimed was 'unusual'—suspecting AI assistance. Despite her transparent methodology and the absence of AI in her work, the 'ChatGPT Penalty' casts an unjustifiably long shadow on her scholarly integrity.

This trend of AI penalty in talent evaluation alarms me.

First and foremost, research shows that AI detection, whether by humans or algorithms, is far from foolproof. False positives are rampant. OpenAI itself cautions against the fallibility of such tools, noting even Shakespeare's works could be mis-flagged. AI expert Professor Ethan Mollick echoes these doubts about AI detectors' reliability.

Moreover, Stanford's Human-Centered AI research indicates that non-native English speakers are more likely to be falsely flagged by these detectors. As an immigrant, I find that this finding resonates deeply with me. The noble goal of fairness could paradoxically foster bias, inadvertently disqualifying the very talent we seek to attract.

As a team leader and former nominating chair on boards, I champion clear guidelines, not hasty judgments. We must assess the content's essence, regardless of its presumed origin. In cases where an AI ban is necessary, it should be stated clearly, and possibly enforced through real-time communications or progress-tracking tools. In most instances, a 'ChatGPT-blind' approach might be the path forward.

Director’s Corner: Regulatory Spotlight on AI + Investment

With the SEC, NASAA, and FINRA's recent alert on Jan 25, 2024, the spotlight is firmly on artificial intelligence (AI) in the investment realm. Highlighting the dual-edged nature of AI—its capacity for innovation against the backdrop of investment fraud concerns—this call to action is clear. As AI weaves deeper into the fabric of investment strategies, its allure brings regulatory scrutiny into sharp focus, emphasizing the crucial balance between opportunity and oversight.

Navigating AI with Governance and Ethics

For board directors, the path forward involves a strategic dialogue with investment fund managers. The integration of AI should not only break new ground but also align seamlessly with regulatory standards and ethical practices. Key areas of focus include:

Regulatory and Ethical Compliance: Ensuring AI applications meet the high bar of current regulations and ethical standards.

Data Integrity: Implementing robust safeguards for the accuracy and integrity of data underpinning AI systems.

Risk Mitigation: Actively addressing AI-associated risks, from market manipulation to unfair trading practices.

Investor Transparency: Maintaining clear communication with investors about AI's role and impact on strategies.

The Regulatory Lens: Protecting Consumers and Markets

The broader regulatory landscape, most recently illustrated by the FCC's ban on AI-voice robocalls on Feb 8, underscores a commitment to consumer protection and responsible AI usage in finance. This environment of regulatory vigilance provides a backdrop for board directors, guiding their decision-making in AI adoption and oversight.

Steering Through AI's Regulatory Seas

The urgency underscored by the regulatory alert necessitates a proactive, informed approach from board directors. Cultivating a compliance-focused, transparent, and ethically driven culture is essential. This approach not only aligns with investor and regulatory expectations but also positions organizations to capitalize on AI's benefits while navigating its challenges.

Hear, Hear, Scribble There: AI Tools for Podcast Note-Taking

Imagine this: You're in the middle of your daily routine, whether it's a serene walk or a bustling commute, and a podcast episode strikes a chord. The desire to capture that fleeting insight is a familiar pang to podcast enthusiasts everywhere. Yet how often have you found yourself later, pen in hand, struggling to recapture that spark? Fear not: here are some AI tools to help with podcast note-taking and quote capturing.

AI Q&A on Transcripts:

When a transcript of your podcast is available on its website (for instance, Acquired provides transcripts of its episodes) or on a podcast platform like Spotify, you can save the transcript to a file and upload it to Claude, ChatGPT, or another AI chatbot to do Q&A on it. A simple prompt like "Identify and extract the top five most impactful quotes from this transcript" can work.

Streamlined AI Summarization & Capture on YouTube:

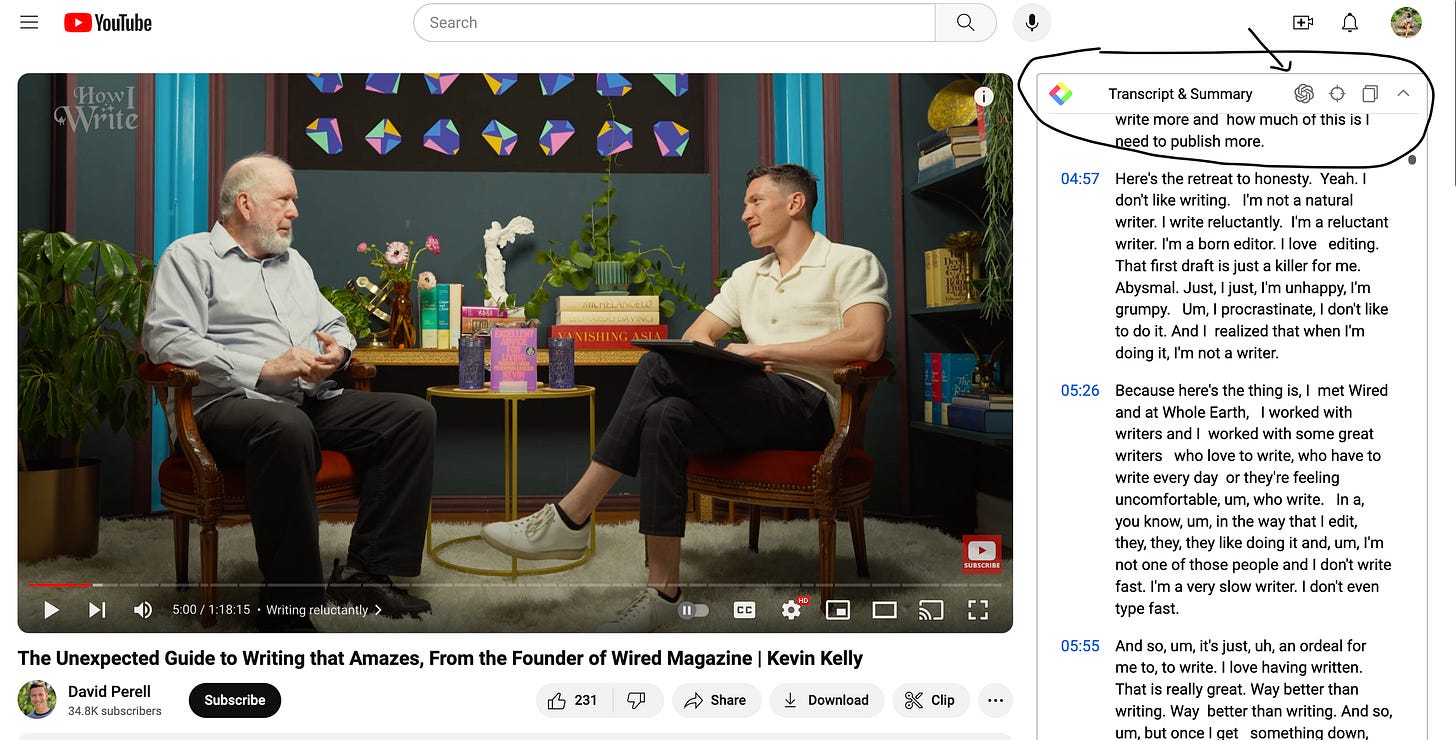

For those podcasts with a video version on YouTube, I use the Glasp Chrome Plugin (see screenshot below) to find or summarize key points. It can be linked to ChatGPT or other AI tools with one click on your browser.

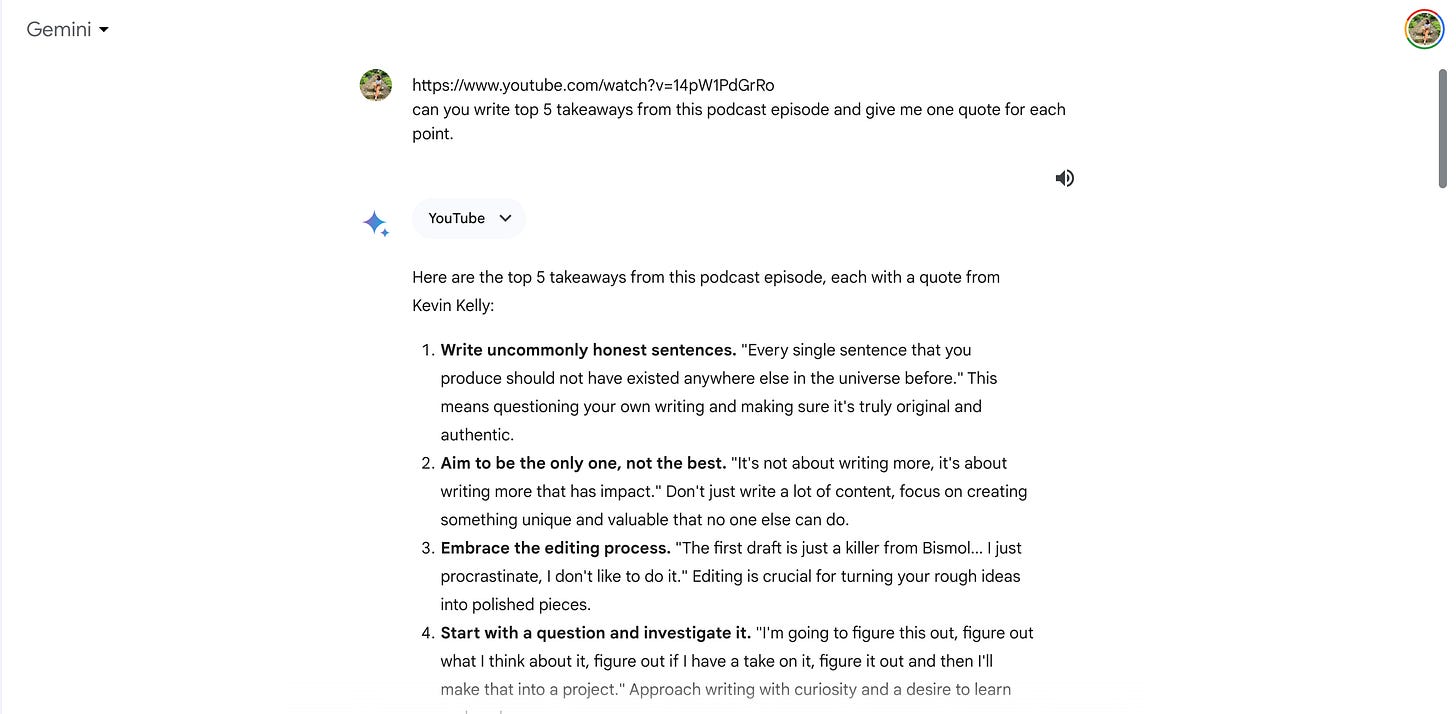

Alternatively, you can use the free version of Google Gemini (formerly Bard). Simply feed it the YouTube link and ask away!

Personalized Notes with Snipd:

For a more hands-on approach to your podcast listening experience, Snipd allows you to capture your personalized notes in real time. While listening, a simple tap on your headphones bookmarks your favorite moments. Snipd then compiles these highlights into an email, delivering a concise list of the main takeaways directly to your inbox. It's worth noting that Snipd comes with a free version, although it may not support all podcasts.

Thank You

I hope this issue gives you some actionable ideas and some AI topics to consider. Your feedback and questions are invaluable while I am testing and adjusting the content and format. Please share your insights, use cases, or challenges with me at joyce@averanda-ai.com. Let’s get AI fluent together.
