#13 How to Reduce AI Hallucination: RAG and Knowledge Graph 101

Plus: OpenAI’s GPT-4o, the AI at Work report, AI and healthcare inequalities, and more

👋 Welcome back to "AI Simplified for Leaders," your free weekly digest aimed at making sense of artificial intelligence for business leaders, board members, and investors. I invite you to explore the past issues here. If you like this newsletter, please share it with your friends. I’d appreciate it!

In this issue, I cover:

Notable news: OpenAI’s GPT-4o, Google’s AlphaFold 3, Apple’s AI moves

AI at work: a must-read for leaders

How to reduce AI hallucination: RAG and Knowledge Graph

How AI can help address healthcare inequalities

One more thing: find your community for AI learning

Enjoy.

AI News, Curated for You

1. OpenAI introduces GPT-4o in its spring update

On May 13, OpenAI revealed its new GPT-4o model, showcasing its ability to reason across text, audio, and video. You can watch the full announcement and live demo video and explore more demos on this page.

The most interesting capabilities to me are the following:

Low latency in AI responses and the ability to interrupt ChatGPT mid-answer: Using English in voice mode felt like having a real conversation. I discussed my investment thesis on a stock with ChatGPT (GPT-4o) while walking my dog. I now anticipate more such brainstorming sessions while doing chores, driving, and walking. Exciting!

Reasoning about objects it ‘sees’: The demo video featuring Khan Academy’s founder, Sal Khan, and his son gives a glimpse of what AI-powered education might look like. I am curious about the potential implications for Khan Academy’s business model.

Huge democratization of high-quality AI access: This model offers GPT-4-level capabilities to users for free. Many casual ChatGPT users were dissatisfied with the quality of GPT-3.5, the model available through free accounts. I hope this improvement will encourage many more people to use it.

I hope people fully appreciate how big an improvement this update is for the user experience of AI chatbots. I am sure more interesting use cases will emerge. Meanwhile, OpenAI’s pricing decision may throw yet another curveball at other AI products on the market.

2. A big step forward in AI-assisted drug discovery

Google DeepMind released AlphaFold 3, which can predict the structures not only of proteins but of nearly all the molecules of biological life. Depending on the interaction being modeled, accuracy ranges from about 40% to over 80%, and the model reports how confident it is in each prediction so researchers can decide how much to rely on it.

3. AI is coming to your phones

According to a Bloomberg report, Apple is close to finalizing an agreement with OpenAI to bring ChatGPT features to the iPhone. Apple is also in discussions with Google and plans to announce a major upgrade to its own voice assistant, Siri. (Let's be honest: who uses Siri for more than basic tasks like checking the time or weather, or making a phone call?) While these developments are intriguing, I’m not sure they will drastically change user behavior, especially after OpenAI’s GPT-4o announcement.

AI at Work: a Must-Read Report for Leaders

In my recent conversations, some leaders have said their businesses have not started integrating AI yet, so they see no rush to put an AI policy in place or have the board adopt an AI governance framework. My question back to them is always: “What if your employees are using AI for work without telling you?”

Microsoft and LinkedIn released an “AI at Work” report last week. With their dominant market shares in office software and recruiting, they present convincing evidence of the inevitability and prevalence of AI usage at work:

Use of generative AI has nearly doubled in the last six months, with 75% of global knowledge workers using it.

78% of AI users are bringing their own AI tools to work (BYOAI)—it’s even more common at small and medium-sized companies (80%).

52% of people who use AI at work are reluctant to admit to using it for their most important tasks.

53% of people who use AI at work worry that using it on important work tasks makes them look replaceable.

This reality poses significant potential risks for businesses in data, privacy, cybersecurity, regulatory compliance, and many other areas.

Don’t wait. Here are a few things you should do as a leader in the near term:

Develop AI Vision and Implement AI Policies: Create your AI vision and strategy based on your business goals. Establish clear guidelines for AI usage within your organization. This will help manage risks and ensure ethical practices.

Prioritize AI Training: Offer training programs for employees to enhance their AI skills and ensure they use AI tools effectively and responsibly. Only 39% of people globally who use AI at work have gotten AI training from their company.

Monitor AI Usage: Regularly review how AI is being used in your organization to identify any unauthorized or potentially harmful applications. AI power users are 68% more likely to frequently experiment with different ways of using AI, suggesting that guidance is crucial.

Foster a Culture of Transparency: Encourage open discussions about AI usage to build trust and address any concerns employees may have. 78% of AI users are bringing their own AI tools to work, indicating a need for open dialogue and structured policy.

What about talent?

A clear trend towards non-technical yet AI-fluent talent should motivate AI upskilling.

Globally, skills are projected to change by 50% by 2030 (from 2016)—and generative AI is expected to accelerate this change to 68%.

12% of recruiters say they are already creating new roles tied specifically to the use of generative AI.

Head of AI is emerging as a new must-have leadership role—a job that tripled over the past five years and grew by more than 28% in 2023.

Remember, you don’t need to be an AI expert. You only need to be AI fluent to ask critical questions and provide strategic insights infused with your domain expertise. In future newsletters, I will recommend some AI classes for non-technical leaders and directors.

How to Reduce AI Hallucination: An Introduction to RAG and Knowledge Graphs

Making AI's output more accurate and reliable doesn't always require expensive fine-tuning of AI models. One common framework for enterprise AI applications is called Retrieval Augmented Generation (RAG). By walking through the basics of RAG and a more advanced variant built on Knowledge Graphs (KG), I hope to increase your confidence in cost-effective AI implementations for your business. This knowledge will also help you ask informed questions and speak more fluently with technical leaders, as RAG is one of the most frequently mentioned acronyms in business AI systems.

What is RAG?

According to Chia Jeng Yang, founder of WhyHow.AI:

“RAG is a framework for improving model performance by augmenting prompts with relevant data outside the foundational model, grounding LLM responses on real, trustworthy information.”

An easier way to think about it: imagine your AI is like an intern trained in school with generic business and analytical skills. Now, you provide the intern with an internal ‘knowledge base’ and instruct it to use only the numbers, facts, or guidelines from this knowledge base to answer questions or carry out tasks.
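For readers who want to see the intern analogy in code, here is a minimal Python sketch of the two RAG steps: retrieve the passages most relevant to a question from a knowledge base, then add them to the prompt with an instruction to answer only from them. It rests on simplifying assumptions: the knowledge base and its contents are hypothetical, word-overlap cosine similarity stands in for the embedding model and vector database a real system would use, and the final call to an LLM is left out.

```python
import math
import re
from collections import Counter

# Hypothetical internal knowledge base (the intern's binder of facts).
KNOWLEDGE_BASE = [
    "Refunds are issued within 14 days of a return being received.",
    "Our Minneapolis office is open Monday to Friday, 9am to 5pm.",
    "Enterprise customers get a dedicated account manager.",
]


def tokenize(text: str) -> Counter:
    """Lowercase bag-of-words counts; a stand-in for a real embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine of the angle between two word-count vectors (0 = no overlap)."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(question: str, docs: list[str], k: int = 2) -> list[str]:
    """Retrieval step: return the k documents most similar to the question."""
    q = tokenize(question)
    ranked = sorted(docs, key=lambda d: cosine_similarity(q, tokenize(d)), reverse=True)
    return ranked[:k]


def build_prompt(question: str, docs: list[str], k: int = 2) -> str:
    """Augmentation step: put retrieved facts in the prompt and restrict the model to them."""
    context = "\n".join(f"- {d}" for d in retrieve(question, docs, k))
    return (
        "Answer using ONLY the facts below. "
        "If the answer is not there, say you don't know.\n"
        f"Facts:\n{context}\n\nQuestion: {question}"
    )


if __name__ == "__main__":
    # The resulting prompt would then be sent to the LLM (not shown here).
    print(build_prompt("How many days until refunds are issued?", KNOWLEDGE_BASE, k=1))
```

Because the knowledge base lives outside the model, keeping answers current means editing the documents rather than retraining the model.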

Why RAG?

RAG helps make sense of the unstructured data common in business environments and adds context that keeps AI system outputs within guardrails. It is easier to implement and more cost-effective than fine-tuning a foundation large language model, and it is sufficient for common tasks such as customer service chatbots, enterprise information retrieval, and business document generation. Because most large language models have a cutoff date for their training data, RAG is also a practical way to provide dynamic, up-to-date information, improving the accuracy and relevance of AI outputs.

Retool estimates that over 36% of enterprise LLM use cases in 2023 employed RAG techniques. RAG is widely used in AI applications in financial and legal services where accuracy and reliability are critical.

Knowledge Graphs Make RAG Smarter

Simple RAG implementations based on vector search have a shortcoming: they are mechanical, seeking answers based on text similarity rather than reasoning. A more advanced RAG technique built on knowledge graphs considers the relationships between concepts and entities. Using our intern analogy, it's like having an associate who understands the reasons behind a question and knows where to look for the most relevant answer, connecting the dots better than a fresh intern.

May Habib, CEO of the enterprise gen AI platform Writer.com, shared a visualization to illustrate why knowledge graphs (on the right) can further reduce hallucination and improve outcomes of complex tasks compared to simple vector-based RAG (on the left):

Data Processing: Knowledge Graphs map data and document hierarchies based on inherent relationships between concepts, which can be dynamically modified.

Query and Retrieval: Graph-based retrieval considers these concept relationships instead of only text similarity, making it more similar to human thinking.

Answer Generation: More relevant information is sent to the LLM for answer generation, leading to more accurate outputs.
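The difference in the query and retrieval step can be sketched in a few lines of Python. This is a simplified illustration, not how Writer or any particular product implements it: the companies and facts are hypothetical, facts are stored as (subject, relation, object) triples, and we assume the entities named in the question (here "Acme Corp") have already been identified. Instead of ranking passages by text similarity, retrieval walks relationships outward from that entity, up to a hypothetical `max_hops` limit.

```python
from collections import deque

# Hypothetical facts stored as (subject, relation, object) triples.
TRIPLES = [
    ("Acme Corp", "acquired", "Beta Labs"),
    ("Beta Labs", "developed", "Product X"),
    ("Product X", "is subject to", "EU AI Act"),
    ("Acme Corp", "headquartered in", "Minneapolis"),
]


def build_graph(triples: list[tuple[str, str, str]]) -> dict[str, list[tuple[str, str, str]]]:
    """Index each triple under both of its entities so relationships can be walked either way."""
    graph: dict[str, list[tuple[str, str, str]]] = {}
    for subj, rel, obj in triples:
        graph.setdefault(subj, []).append((subj, rel, obj))
        graph.setdefault(obj, []).append((subj, rel, obj))
    return graph


def graph_retrieve(graph, start_entities: list[str], max_hops: int = 2) -> list[str]:
    """Breadth-first walk from the question's entities, collecting facts up to max_hops relationships away."""
    facts: list[str] = []
    seen_facts = set()
    visited = set(start_entities)
    queue = deque((entity, 0) for entity in start_entities)
    while queue:
        entity, depth = queue.popleft()
        if depth == max_hops:
            continue  # Do not expand beyond the hop limit.
        for triple in graph.get(entity, []):
            if triple not in seen_facts:
                seen_facts.add(triple)
                facts.append(" ".join(triple))
            # Queue the entity on the other end of this relationship.
            for neighbor in (triple[0], triple[2]):
                if neighbor not in visited:
                    visited.add(neighbor)
                    queue.append((neighbor, depth + 1))
    return facts


if __name__ == "__main__":
    graph = build_graph(TRIPLES)
    # Facts that could be passed to the LLM as context for a question about Acme Corp.
    for fact in graph_retrieve(graph, ["Acme Corp"], max_hops=3):
        print(fact)
```

Asked whether Acme Corp is exposed to the EU AI Act, the walk follows "acquired", "developed", and "is subject to" and returns the chain of facts connecting the two, which a similarity search on the question alone could miss (for example, the middle link "Beta Labs developed Product X"). With `max_hops=2` the final link is not reached, which shows the trade-off: more hops connect more dots but also send more, possibly less relevant, facts to the model.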

I hope you now feel more confident when you hear about RAG or knowledge graphs and when discussing how businesses can reduce AI hallucinations. Read more here and here.

How AI Can Help Address Healthcare Inequalities

At a recent event in Minneapolis, a senior executive and board director in healthcare services asked how AI could help address healthcare inequalities. Here is an expanded version of my answer. This topic is important because healthcare disparities impact millions, and AI could potentially offer innovative solutions to bridge these gaps.

AI has immense potential to reduce healthcare inequalities by addressing biases and improving access to quality care. A key area where AI can make a big difference is in correcting gender bias in medical research and treatment.

Historically, medical research has often used data from mostly male participants, leading to a gender bias in understanding diseases and developing treatments. AI can help fix this by analyzing large, diverse datasets that better represent the population, including gender differences. This allows AI to find patterns and insights that traditional research methods might miss, leading to more inclusive and tailored treatment approaches.

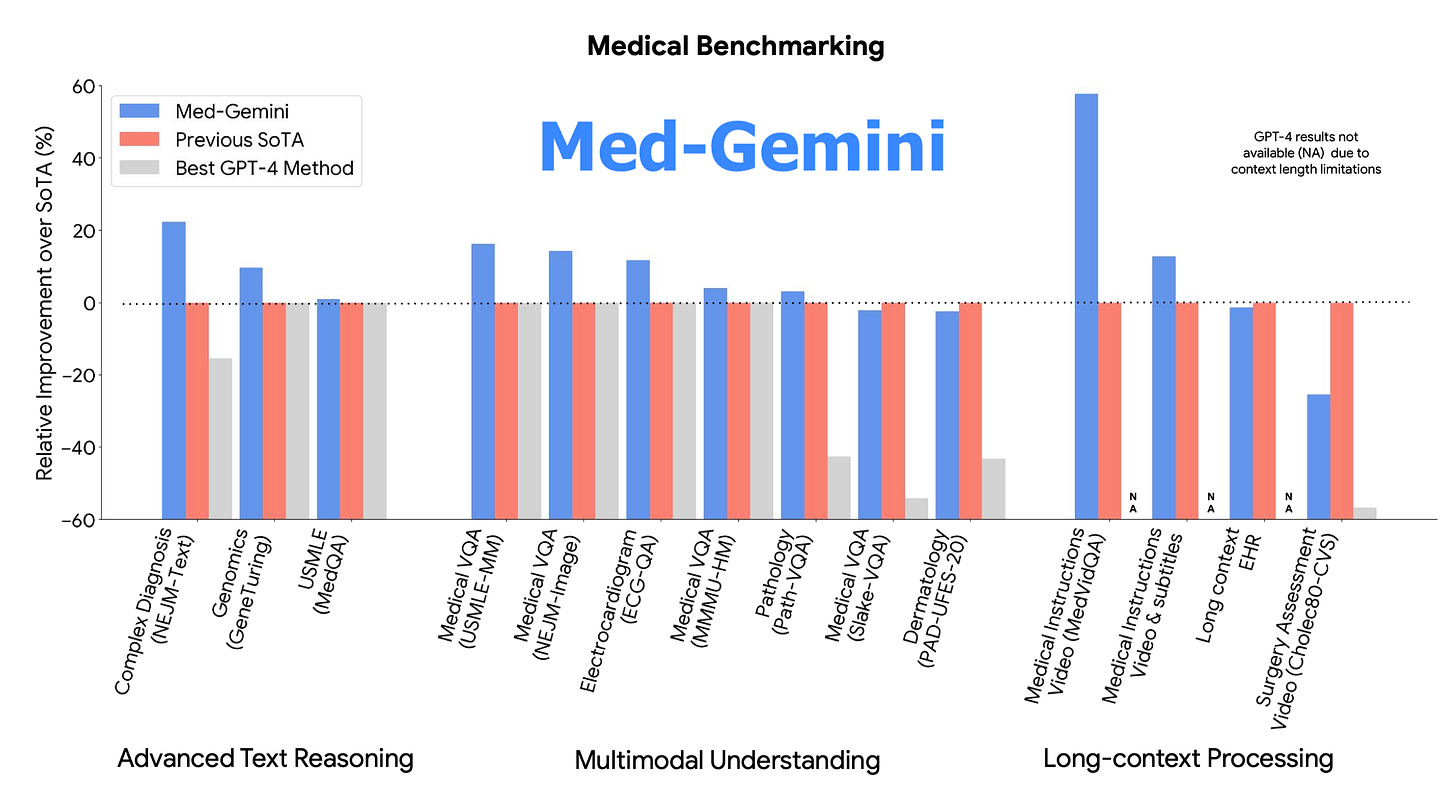

Google's new Med-Gemini AI models are a major advancement in AI for healthcare. These models can process and understand complex medical data, including text, images, and even signals like ECGs. For example, Med-Gemini-2D outperformed other models on several benchmarks, including the New England Journal of Medicine image challenge, by an average relative margin of 44.5%. This suggests that AI can improve diagnostic accuracy and, when trained on representative data, reduce biases in data analysis.

Med-Gemini also excels at analyzing long medical records and research papers, helping doctors understand complex medical data. It can access the latest medical information online, providing up-to-date and accurate responses to medical questions.

AI can also improve healthcare access for underserved communities. Predictive models can identify areas with limited healthcare resources and help prioritize services and outreach efforts. AI-powered telemedicine platforms can offer remote consultations and monitoring, reducing the need for in-person visits and making healthcare more accessible to people in remote or underserved areas, according to research by the Lown Institute. For people who have relied solely on internet searches for their basic healthcare needs, at least “Dr. Google” is getting a significant upgrade.

Additionally, AI can streamline administrative processes, reducing costs and increasing efficiency, which can make healthcare more affordable. By automating tasks like scheduling, billing, and data entry, AI frees up healthcare professionals to focus on patient care.

However, it’s crucial to ensure the data used to train AI models is diverse and representative, according to the Harvard T.H. Chan School of Public Health. Collaboration between healthcare professionals, researchers, and AI developers is essential to fully leverage AI’s potential while minimizing risks. Involving diverse communities in AI development can help ensure these technologies meet the needs of all populations fairly.

AI has the potential to significantly reduce healthcare inequalities by addressing gender biases in research, improving access to care, and streamlining administrative processes. The advancements in AI models like Med-Gemini highlight AI’s transformative potential in healthcare, but careful implementation and collaboration are necessary to ensure these technologies are used equitably and effectively.

One More Thing

One way to stay motivated in your AI learning journey is to find a community that shares your passion and offers mutual support. “Women and AI” is an excellent example of such a community. It’s a hub for women at all stages of their AI journey, from students and early-career professionals to seasoned experts and AI thought leaders. They have podcasts, virtual Saturday socials, and AI startup showcases. Follow them on LinkedIn if you are interested.

What communities are you part of on your journey? Share in the comments or send me a message!

Have a great week.

Joyce Li

I enjoyed the section on AI and healthcare; there is so much low-hanging fruit in healthcare. Plus, AI can help address equity and inclusion for diverse populations.

Thanks for the shoutout to the Women And AI podcast and community; we appreciate the call-out! We focus on elevating our community by shining a light on women doing amazing work in AI.

Not having a go at you, but it's fascinating how Bloomberg (or anyone else) would frame things as "AI is coming to your phones". Remembering that Google has been far ahead of Apple in the AI space for many years, knowing that Gemini Pro has been on their Pixel phones for many months now, and that Android phones have apps for ChatGPT, Poe, Pi, and Microsoft's Copilot, the headline feels a little like "Keyboards are coming to your laptops".