#8 How enterprises are adopting and building AI applications

Plus: Sakana AI's Breakthrough, the accidental invention that revolutionized AI, use cases in investment management and healthcare, and more

👋 Welcome back to "AI Simplified for Leaders," your weekly digest aimed at making sense of artificial intelligence for business leaders, board members, and investors. I invite you to explore the past issues here.

You might notice I changed how the title is structured and the email sender is displayed. I am experimenting with a few ideas and appreciate your feedback and patience.

In this issue, I cover:

Sakana AI's breakthrough in automating AI model development

Amazon's $4 billion investment in Anthropic

Stability AI's leadership crisis

The accidental invention that revolutionized AI

How enterprises are adopting and building AI applications

Real-world use cases in investment management and healthcare

For directors: an example of an effective AI ethics policy and a survey paper on navigating security and privacy challenges of large language models

Lastly, I share a personal reflection on finding the music in our writing. Enjoy.

Notable AI News, Curated for You

This week feels unusually quiet compared to last week's whirlwind of significant AI news.

1. Sakana AI Breakthrough: Automating AI Model Development in a Cost-Effective Way

Japanese startup Sakana AI developed a breakthrough technique called Evolutionary Model Merge. It enables developers to create new AI models by merging parts of existing models, drawn from the vast selection of open-source models, without spending huge amounts to train and fine-tune their own. There are more than 500,000 models available on Hugging Face, and this method aims to provide a more systematic approach to discovering efficient model merges.

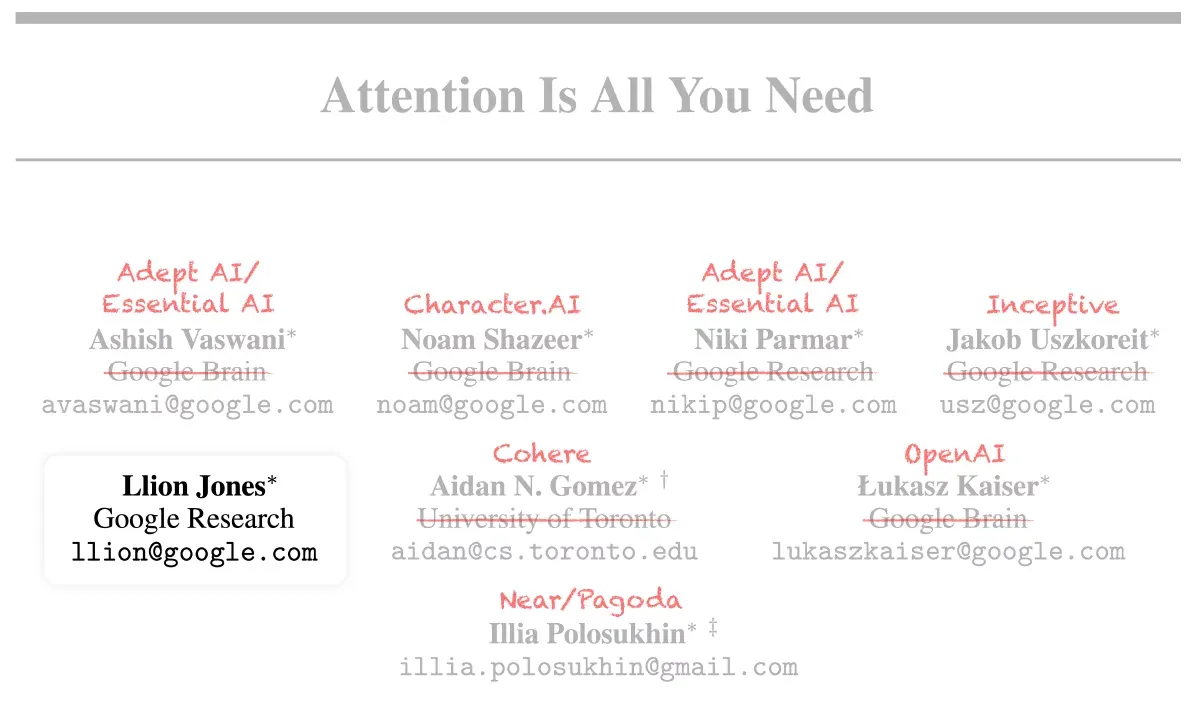

Sakana AI was founded by Llion Jones, one of the eight co-authors of the “Attention Is All You Need” paper, and David Ha, the former head of research at Stability AI (more on that company below) and a former Google Brain researcher. Check out GlobalVC’s investment thesis on this startup.

2. Amazon Concludes $4 Billion Investment in Anthropic

Amazon has completed the largest outside investment in its history, pouring $4 billion into Anthropic without requesting a board seat (by comparison, Microsoft has a nonvoting seat on the OpenAI board). Interestingly, a recent a16z report surveying enterprise users (covered in a later section) reveals that cloud service providers heavily influence model purchase decisions. For instance, Amazon users prefer Anthropic or Cohere models.

3. Stability AI CEO Departs Amid Turbulence

Stability AI, a UK-based AI startup founded in 2019 and known for its open-source text-to-image model Stable Diffusion, is facing an existential crisis. Its CEO, founder, and 75% equity owner, Emad Mostaque, stepped down amid reports of mismanagement and financial strain. While some view this as a sign of the demise of stand-alone AI startups, Stability AI's issues appear to be idiosyncratic and deeply rooted in management's damaged credibility. This development likely marks the end of Stability AI, which had raised a total of $197 million and was once valued at over $1 billion.

The Accidental Invention That Revolutionized AI

The research paper "Attention Is All You Need" is recognized for introducing the Transformer model, a breakthrough that led to the rise of generative AI and Nvidia's impressive market valuation.

Recently, Nvidia's CEO, Jensen Huang, interviewed the authors and said, "We have a whole industry that is grateful for the work that you guys did."

WIRED published an insightful article, “8 Google Employees Invented Modern AI. Here’s the Inside Story,” detailing the origins of the paper. It is a story full of serendipitous conversations and brilliant collaborations enabled by Google's unique culture in its earlier days. Nearly all of the authors went on to found their own companies, and they continue to make an impact in this field. I strongly recommend reading it.

How Enterprises Use and Build AI Apps

(A Significant Shift from Six Months Ago)

It's surprising to see many on social media still quoting research reports or surveys from six months ago, suggesting that generative AI remains primarily a consumer phenomenon with chatbots and image generation or that it has not gained real traction among enterprise users beyond simple tasks like meeting notes or document summarization.

This could not be further from reality.

My conversations with business leaders clearly indicate a transition from the experimentation phase to the production phase among enterprise users. I'm pleased to see a16z’s report titled “16 Changes to the Way Enterprises Are Building and Buying Generative AI” (March 21, 2024) confirming these observations.

"Though these leaders still have some reservations about deploying generative AI, they're also nearly tripling their budgets, expanding the number of use cases that are deployed on smaller open-source models, and transitioning more workloads from early experimentation into production." - a16z

I find the following changes particularly interesting and impactful, with my comments:

AI investments now fall under recurring software budget lines - this could mean that AI is seen as a business driver rather than a one-off cost reducer.

Methods for measuring ROI are emerging but still in the early stages. Model cost is only 1/3 of the total cost, and the right technical talent remains necessary for the 'last mile' of building and deploying solutions at scale.

Enterprises are careful to avoid AI vendor lock-in given how fast the field is developing. Their tactics include using multiple models, fine-tuning models, adopting open-source options, and building applications in-house on APIs that allow them to switch models later.

Cloud service providers are the gatekeepers and heavily influence the choice of AI models, especially when model performance is converging. This point and the previous one create challenges for stand-alone AI model companies that count on SaaS-style monetization down the road.

69% of surveyed companies use internal benchmarks to evaluate models, making fine-tuned models for specific use cases more likely to match or exceed off-the-shelf models in performance at a more attractive cost.

Enterprises are excited about internal use cases but remain more cautious about external ones due to hallucination, safety, and public relations risks - consistent with the use cases in a later section.

These findings underscore the rapid evolution of generative AI in the enterprise landscape. As businesses shift from experimentation to production, they are strategically allocating resources, leveraging open-source models, and focusing on tailored solutions to maximize the potential of generative AI while navigating the challenges of this transformative technology. I recommend reading the full report for the complete list of 16 changes.

Generative AI Transforming Industries: Use Cases

In my conversations with senior leaders and directors, the focus of questions has shifted from risks (hallucination, security, bias, etc.) to opportunities. How are companies leveraging generative AI to revolutionize their industries? To address this, I highlight use cases from recent news and reports in this newsletter. This time, I spotlight investment management and healthcare.

Investment Management: Balancing Innovation and Caution

Invesco, one of the ten largest asset managers in the US, is adopting generative AI in operations and compliance while proceeding cautiously when it comes to investment decisions or client interactions.

"As the quantitative team starts to employ better generative AI, it'll help them make better decisions, but turning over the portfolios to gen AI is not something I see in the near-term future." - Andrew Schlossberg, CEO of the $1.6 trillion Invesco

The $20bn hedge fund Balyasny Asset Management, in its announcement of a LlamaIndex and Databricks integration this week, praised the positive impact it is seeing from integrating generative AI applications into its idea generation and investment due diligence processes.

I See a Typical Adoption Journey in Investment Management:

Start with productivity improvement: preliminary document drafting, note-taking, and information extraction.

Progress to complex insights and process automation: deriving insights from unstructured and private data, idea generation, investment research, and compliance streamlining.

Maintain human control over core services and client outcomes: portfolio construction and final investment decisions are usually the areas where firms are not rushing to adopt AI.

The first stage of adoption, focused on efficiency, may seem simple but should not be underestimated. It delivers an immediate return on investment (ROI). Equally important, this phase, with its internal focus, strict guardrails, and experimental nature, builds trust in human-centered AI solutions. It establishes a critical foundation, encouraging us to explore new possibilities and ask "What if?" and "Could we...?" questions. This paves the way for the second stage, where a more profound transformation in workflows and business strategies may unfold.

Healthcare: Modernizing Operations and Workflows

Sequoia published an article on generative AI's impact on healthcare. Each year, $300 billion of healthcare spending in the US is allocated to administrative operating expenses, mostly for repetitive, labor-intensive processes. This presents a prime opportunity for generative AI applications.

In healthcare, AI adoption is centered on automating back-office operations and streamlining front-line workflows. As in the investment management adoption path, generative AI has yet to be used in critical decisions affecting patient outcomes.

The market map below illustrates the areas where innovations are happening. From patient engagement to revenue cycle management, healthcare is experiencing a significant leap forward in modernization.

This market map also serves as a reminder of a point I made in the past: The ease of building products upon powerful foundational AI models makes differentiation by product alone extremely challenging.

Director's Corner: Diving into AI Ethics Policy and Navigating Privacy and Security Challenges

In a departure from our usual discussions on frameworks, key questions, and regulatory developments in this section, let's take a more technical approach to the topics we've been exploring in the boardroom. Don't worry—we'll return to our regular format next time.

Crafting an AI Ethics Policy: A Template for Content Creators

What does an effective AI Ethics Policy look like? Poynter.com offers an example designed for newsrooms, which can serve as a template for other content creators to adapt. The policy is built upon the principles of accuracy, transparency, and audience trust. When reviewing your company's AI Ethics Policy, focus on these principles and ensure they align with your organization's values and mission.

Navigating the Security and Privacy Challenges of Large Language Models

The paper "Security and Privacy Challenges of Large Language Models: A Survey" provides a comprehensive overview of the vulnerabilities and risks associated with deploying and using Large Language Models (LLMs) across various sectors, including transportation, education, and healthcare. The survey underscores their susceptibility to security and privacy attacks, such as jailbreaking, data poisoning, and Personally Identifiable Information (PII) leakage attacks.

For board directors, the key takeaway is the critical need to balance the benefits of LLMs with the potential security and privacy risks. Implementing robust defense mechanisms and staying informed about emerging threats are essential steps in leveraging LLMs' capabilities while safeguarding against vulnerabilities.

One Last Thing: Hear the Music in Writing

The start of April is Spring Break in the Bay Area and also the season for the final rounds of high-school speech competition tournaments. Who knew that watching my son edit his speeches would make me rethink my business writing?

He sees music in written words: some are smooth, while others are chaotic; some are boring, while others are exciting. When he edits his speech, he listens for the music in his words, the rhythm that engages his audience emotionally, and removes the screeching notes that get in the way.

In writing this newsletter, it's easy to get lost in the details—the format, the jargon, the individual word choices. My son's approach taught me to focus on the flow, the music that carries the reader from start to finish.

🎼 Can you hear the music in your writing?

Thank you for reading. Enjoy your Spring.

Joyce Li

A good read, Joyce! Much agree with your views towards application of AI to investment management (useful for operations). I can also feel the pulse in your writing - all the best to your son's competition!