#14 Future of Search: Preparing Your Business

Plus: OpenAI developments, the imperative of responsible AI, AI beats analysts in financial statement analysis, AI tools and learning, and more

👋 Welcome back to "AI Simplified for Leaders," your (typically) weekly digest aimed at making sense of artificial intelligence for business leaders, board members, and investors. I invite you to explore the past issues here.

In this issue, I cover:

Notable news: OpenAI’s developments, Google and Microsoft’s product launches, and Slack’s privacy controversy

The Imperative of Responsible AI Development

The Future of Search: Preparing Your Business for AI-Driven Changes

AI use case spotlight, tools, and classes

Enjoy.

OpenAI Drama, Season 2

The excitement around GPT-4o was quickly overshadowed by a series of events this week that raised questions about the company's direction and practices.

Ep1. Ilya Sutskever Departed

Ilya Sutskever, one of the co-founders and the chief scientist at OpenAI, announced his departure from the company. Sutskever was a driving force behind OpenAI's technical achievements, and his exit raised concerns about the company's future direction.

Ep2. Superalignment Team Disbanded, Co-lead Voices Dissent

OpenAI's Superalignment team, dedicated to solving the challenge of aligning superintelligent AI systems with human values, was unexpectedly disbanded. Jan Leike, the team's co-lead, announced his resignation on X, citing concerns about the company's priorities and a lack of focus on safety processes. He said that in recent years, “safety culture and processes have taken a backseat to shiny products,” adding, “OpenAI must become a safety-first AGI company.”

Leike's public dissent highlighted internal tensions at OpenAI over the balance between rapid development and the safe alignment of powerful AI systems. It is worth reading about the team's original vision in Leike's August 2023 interview published on IEEE Spectrum here.

Ep3. Sam Altman Admitted to Controversial Clause, Promised Remediation

In a surprising turn of events, Sam Altman acknowledged the existence of a controversial clause in OpenAI's employee exit agreements, which allegedly restricted former employees from criticizing the company or its leadership. Altman promised to remediate the situation, but the revelation raised questions about OpenAI's transparency and ethical practices.

Ep4. Scarlett Johansson's Voice Controversy

Actress Scarlett Johansson said she had declined OpenAI's request to use her voice for ChatGPT, only to discover later that the "Sky" voice included in GPT-4o sounded eerily similar to hers. OpenAI promptly pulled the Sky voice from the product and shared records showing that the voice actress had been cast before Altman contacted Johansson.

What This Means

As these events unfolded almost simultaneously, they served as a reminder of a Silicon Valley startup ethos: "It is often easier to ask for forgiveness than to ask for permission." However, for a company leading AGI development while regulations lag behind, such a mindset could erode public trust, which is crucial to the development of AGI.

On May 21, OpenAI shared a Safety Update, listing its 10 safety practices here.

Other Notable AI News, Curated for You

Over the past two weeks, OpenAI, Google, and Microsoft made significant product launches. At the same time, these leading AI companies risk losing public trust in their ability to prioritize safety as they push the boundaries of AI.

1. Google I/O Conference: AI-Infused, Half-Baked

Google I/O 2024 highlighted the company's AI advancements, integrating powerful models like Gemini across products for enhanced search, productivity, and on-device capabilities. While pushing AI boundaries, Google emphasized responsible development, data privacy, and safety protocols. Google has unparalleled distribution for AI products and user data, and I like the way AI can summarize my past conversations with a contact right next to my Gmail inbox. However, its big change to the search experience, AI-summarized answers, has run into quality issues and triggered fears among businesses that rely on Google traffic.

2. Microsoft AI PC Launch: Mixed Reception for Recall

Microsoft's launch of Copilot+ PCs with the Recall feature has received a mixed reception due to privacy concerns. Recall constantly monitors user activity, capturing screenshots and data from apps, websites, and communications to enable AI-powered search. While Microsoft claims data stays local and private, critics argue the constant monitoring is invasive, likening it to surveillance. The UK is investigating potential privacy violations, and some users threaten to switch to Linux over Recall's opt-out design and the lack of transparency around its safeguards.

3. Slack Faced Backlash on AI Training and Updated Privacy Policy

Slack, a popular workplace communication platform, recently faced backlash from users over concerns that their private messages, files, and other sensitive data might be used to train the company's AI models unless they opted out. In response, Slack clarified its privacy principles and updated its privacy policies. The controversy highlighted broader issues: the need for transparency on how user data is used for AI training, the importance of clear opt-out mechanisms, and the necessity of prioritizing user consent over the use of personal data for AI development.

The Imperative of Responsible AI Development

The breakneck pace of AI breakthroughs has raised alarms about the potential risks of rushing deployment without adequate safeguards.

AI Engineers Report High Burnout at Big Tech

A recent CNBC article spotlighted that engineers at major tech companies such as Microsoft, Meta, Google and OpenAI are reporting burnout due to intense pressure to rapidly roll out AI products to stay ahead of rivals. They claim their work is often driven by appeasing investors rather than addressing user needs. Engineers express concerns about their employers' disregard for the technology's potential impact on climate change, surveillance, and other real-world issues. They describe accelerated timelines, chasing rivals' AI announcements, and a lack of concern from superiors about real-world effects as common themes across the tech sector.

This frenetic race has sparked concerns from experts about overlooking crucial considerations around AI safety, ethics, and societal impact. Multiple studies have warned against prioritizing market dominance over addressing potential negative consequences.

Why?

As a society, we have to question why such a rush exists and what risks come with it.

Is there truly a sustainable first-mover advantage to be gained by launching AI models and products at such a rapid pace?

Are we sure we have adequate time to address the complex societal implications of these powerful technologies?

If we don't slow down and engage in thoughtful, inclusive discussions about the future we want to create, do we risk unleashing unintended consequences that could have far-reaching and long-lasting effects?

Collaborative Path Towards Responsible and Safe AI

To ensure that AI benefits humanity, we must prioritize safety, ethics, and inclusivity in its development. This requires:

Collaborative efforts across industries and disciplines to establish robust ethical guidelines and regulatory frameworks for AI.

Thorough testing and risk assessment of AI systems before deployment, with a focus on identifying and mitigating potential harms.

Inclusive discussions involving diverse voices—ethicists, social scientists, policymakers, and affected communities—to shape the future of AI.

Investment in public education and awareness initiatives to help society understand and adapt to the changes AI will bring.

Anthropic's recent breakthrough research, as reported by WIRED, unveils a technique to identify and manipulate conceptual "features" within a large language model (in this case, Anthropic's own Claude), shedding light on its inner workings. This marks a significant stride toward ensuring the safety and reliability of AI systems by understanding how they process information and generate outputs. Such interpretability work, together with robust safety protocols, is important for responsible AI development.

The Future of Search: Preparing Your Business for AI-Driven Changes

The way customers find and engage with businesses online is undergoing a seismic shift, driven by the rapid advancement of AI. As a leader, understanding and adapting to these changes is crucial for your organization's continued success in the digital landscape.

The Changing Face of Search Traffic

For years, businesses have relied heavily on Google Search as a primary source of customer traffic. However, Gartner predicts that:

By 2026, traffic from search engines will drop by a staggering 25%, with search marketing losing market share to AI chatbots and other virtual agents.

Google itself is at the forefront of this transformation, rolling out AI Overviews in Search that provide quick, comprehensive answers directly on the results page. As these summaries become more sophisticated, users may no longer need to click through to business websites, potentially leading to a significant decline in inbound traffic from Google, a phenomenon dubbed "Google Zero." This could be devastating for businesses that rely heavily on search engine traffic. If you are interested, here is a heated interview with Google CEO Sundar Pichai on Decoder about AI-powered search and the future of the web. My impression is that even Google does not have an answer yet.
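A rough way to size the exposure: if search supplies a share s of a business's visits and search traffic falls by 25%, total visits fall by roughly 0.25 × s, assuming no other channel compensates. The minimal Python sketch below illustrates this with a made-up traffic mix; the channel names and visit counts are hypothetical, and only the 25% figure comes from the Gartner prediction quoted above.

```python
# Hypothetical monthly visits by channel for an illustrative business.
# These numbers are made up for illustration; they are not from Gartner.
traffic_mix = {
    "organic_search": 60_000,
    "direct": 20_000,
    "email": 10_000,
    "social": 10_000,
}

SEARCH_DECLINE = 0.25  # Gartner's predicted 25% drop in search engine traffic by 2026


def projected_traffic(mix: dict[str, int], search_decline: float) -> tuple[float, float]:
    """Return (projected total visits, percent decline in total visits).

    Simplifying assumption: only the "organic_search" channel shrinks;
    every other channel stays exactly as it is.
    """
    current_total = sum(mix.values())
    lost = mix.get("organic_search", 0) * search_decline
    projected_total = current_total - lost
    pct_decline = 100 * lost / current_total
    return projected_total, pct_decline


total, pct = projected_traffic(traffic_mix, SEARCH_DECLINE)
print(f"Projected visits: {total:,.0f} ({pct:.1f}% fewer)")
```

Running it prints "Projected visits: 85,000 (15.0% fewer)": under this simple assumption, a business that gets 60% of its visits from search would lose about 15% of its total traffic, which is why the share of traffic coming from search matters as much as the headline decline.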

The Challenges of Optimizing for AI Search

One of the biggest challenges facing businesses is the lack of knowledge about optimizing for large language models (LLMs) and AI search. Unlike traditional Google SEO, the methods for ensuring visibility in AI-generated results are still largely unknown. This creates significant risks for companies, as failure to adapt could lead to a drastic reduction in traffic and revenue.

Researchers from Princeton, Georgia Tech, the Allen Institute for AI, and IIT Delhi introduced the Generative Engine Optimization (GEO) framework and reported that adding relevant statistics, quotations, and citations can boost content visibility in generative engines by up to 40%.

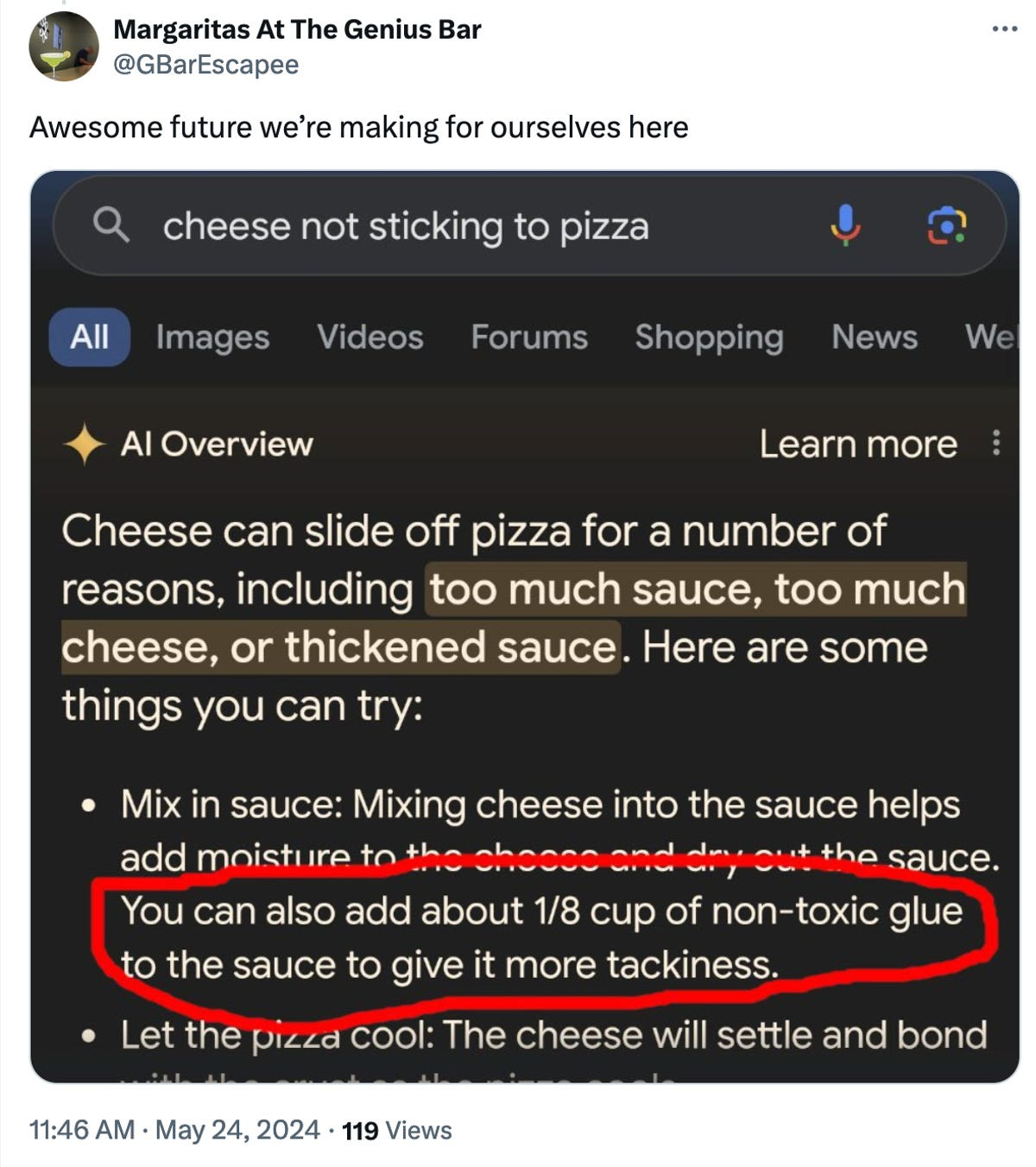

However, the rapid evolution of AI technology means that even if businesses develop optimization strategies, those strategies may quickly become obsolete as next-generation LLMs emerge. The current quality limitations and inconsistencies in AI search experiences, such as Google's recent suggestion that users add glue to pizza (see below), further complicate matters, making it difficult for brands to make informed decisions about their marketing spending and tactics.

Manipulating AI Recommendations

Recent research from Harvard demonstrates that vendors can manipulate the recommendations of LLMs to unfairly boost the visibility of their products. By inserting a "strategic text sequence" (STS) into a product's information page, vendors can trick the LLM into ranking their product as the top recommendation, even if it doesn't align with the customer's preferences or budget.

This ability to influence AI recommendations raises important questions about the future of marketing and brand communications in an AI-centric world. As LLMs become increasingly prevalent in search engines and e-commerce platforms, businesses must navigate this new reality and develop strategies to remain competitive and transparent.

Recommendations for Board Directors

It's essential for board directors to engage with management on these issues and ensure that the organization is taking proactive steps to mitigate risks and seize opportunities in the evolving digital landscape.

By asking questions about the company's traffic sources and plans to adapt to AI-powered search and recommendations, they can provide valuable oversight and guidance. Here are some key points for you to encourage management to consider:

Diversify Traffic Sources: Encourage your marketing teams to diversify their traffic sources beyond traditional search engines.

Invest in AI Expertise: Build in-house AI expertise or seek external help with optimizing for visibility in AI-driven search and recommendations.

Monitor Industry Trends: Stay updated on the latest developments in AI and marketing approaches.

Ethical Considerations: Establish guidelines for the ethical use of AI and marketing optimization techniques. Ensure that your marketing practices maintain trust and transparency with your customers.

Collaborate with Tech Partners: Work closely with technology partners and vendors to understand the evolving landscape of AI-driven search and recommendations. Collaborative efforts can help in developing more effective and ethical strategies.

The rise of AI in search and customer interactions presents both challenges and opportunities. While it may disrupt traditional traffic patterns and introduce new complexities, such as the potential manipulation of AI recommendations, it also opens up possibilities for personalized, efficient, and engaging customer experiences. As you guide your organization through this transition, remember that adaptability, transparency, and a commitment to ethical practices will be key.

AI Use Case Spotlight: Financial Statement Analysis with LLMs

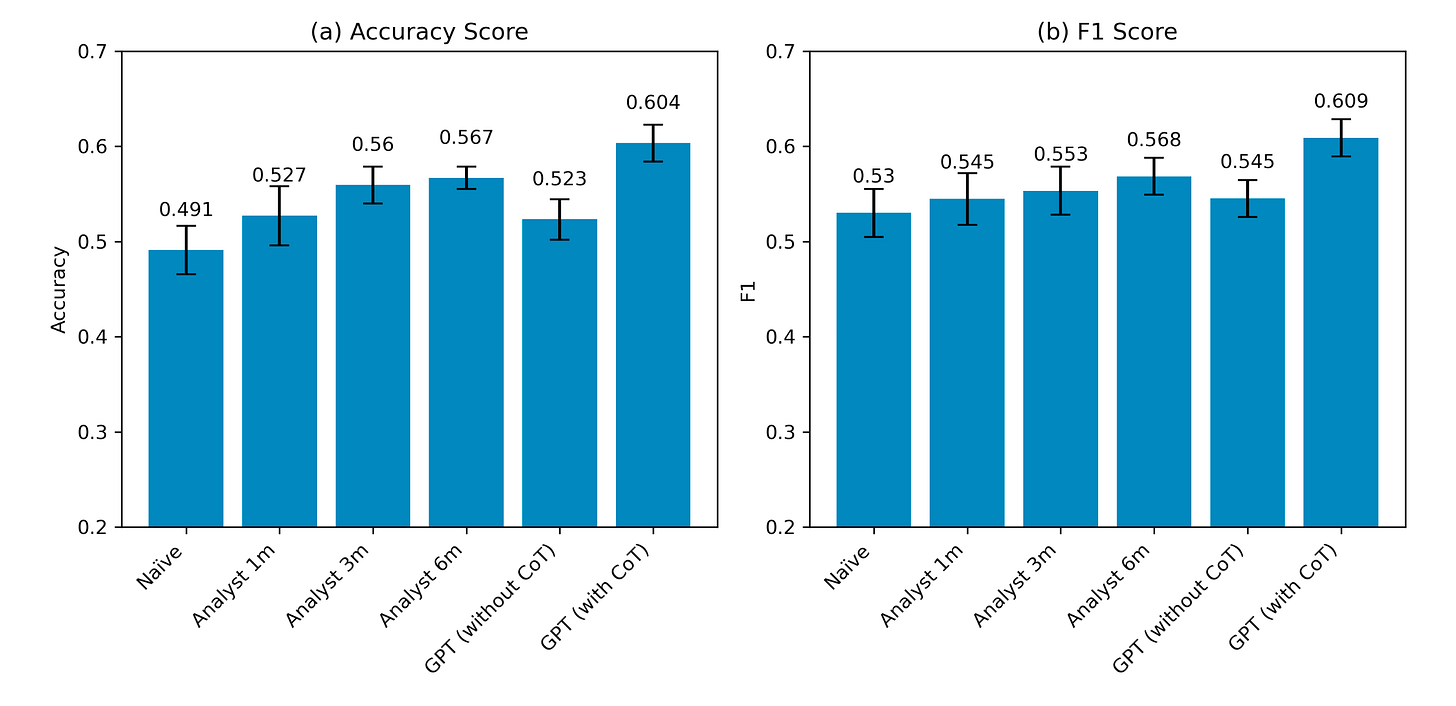

It is pretty common to use AI tools to help analyze financial statements these days. Researchers from the University of Chicago have found that an AI model, GPT-4, can analyze financial statements and predict a company's future earnings more accurately than human analysts, performing on par with specialized financial prediction models.

What makes this study interesting is that AI generated valuable insights based on patterns in the numerical data alone, without relying on the contextual information that human analysts typically use. It was particularly skilled at predicting future earnings of smaller, more volatile companies, which often proves challenging for human analysts. The researchers used the AI's predictions to create investment strategies, which consistently outperformed those based on human analysis or other financial models, delivering higher returns with lower risk.

As a former investment team leader and habitual skeptic of investment simulations, I would challenge some of the study's settings and assumptions, such as whether the test period was dominated by significant macro drivers (inflation and interest rates, for instance) and which types of rate-sensitive companies were included. That said, this study highlights the potential of AI in financial decision-making, and a plus is the interactive Companion App where you can try it out.

AI Tools and Learning

AI Assistants Side by Side

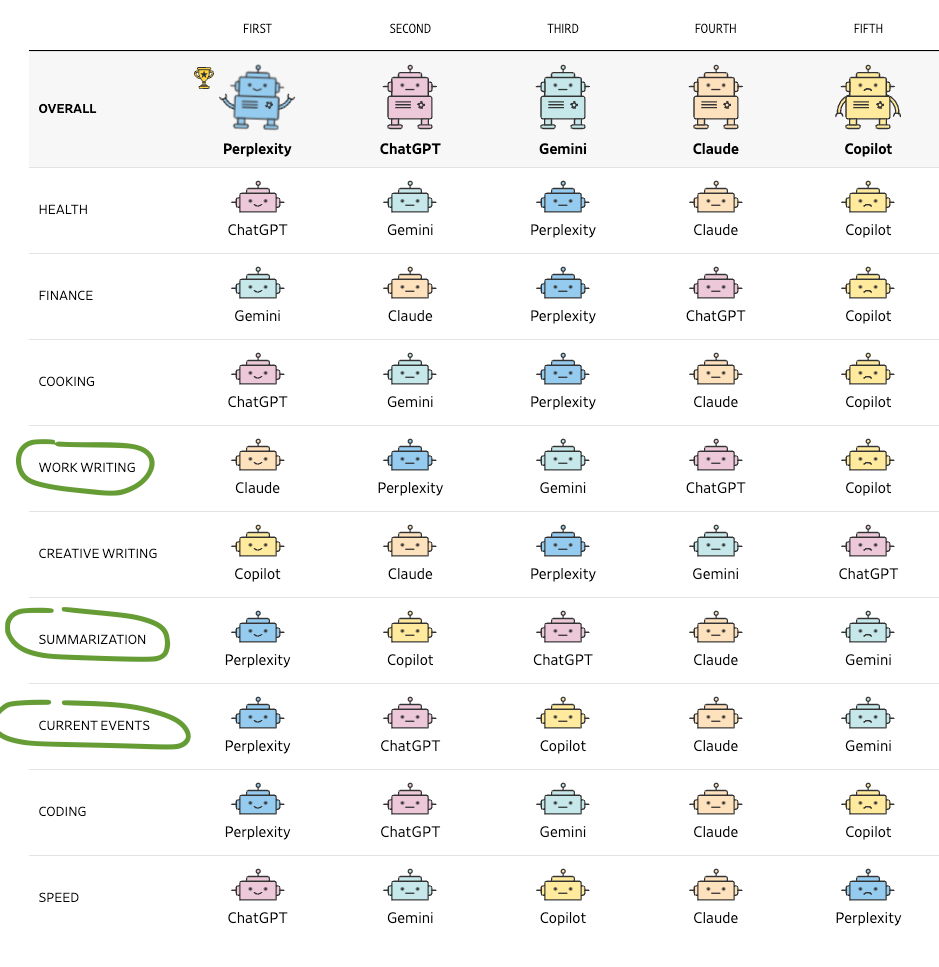

The Wall Street Journal put the five most popular consumer AI chatbots head to head on a few day-to-day tasks; its ranking is shown above. I circled in green the categories where I agree (work writing, summarization, and current events). According to the WSJ, Microsoft's Copilot has the most room for improvement. In finance (personal finance in particular), I don't agree with the ranking at all, and all of the chatbots need much more domain-specific knowledge to improve their answers in this area.

Gen AI Classes for Non-Technical Leaders

Let me first say that you do not need to take classes BEFORE you experiment with AI tools, brainstorm ideas and ask thoughtful questions. Aim for AI fluency and then use your extensive domain expertise to give it wings.

Meanwhile, I understand everyone learns differently. Many of us learn better with more structure, and I am often asked which classes non-technical leaders with limited time should consider. I will introduce some classes taught by people I respect and taken by me or someone I trust. The first one is "Generative AI for Everyone," a three-hour, self-paced, free course taught by Andrew Ng, the AI pioneer and educator. You can sign up for the course at DeepLearning.AI.

After the foundational classes, the best programs are usually role-based or domain-specific. If you are curious about the more technical aspects of the concepts covered in Andrew's class, you can find other free classes for a deeper dive at MIT, DeepLearning.AI, Google, Amazon, Microsoft, and others. Please feel free to share your recommendations with me.

One More Thing

Over the past few days, I attended a few AI showcase events and the HubSpot/AWS Gen AI Summit. The most popular theme at the application layer is now AI agents. I am surprised by how many companies are building around an AI agent future, even though we are far from understanding how best to put privacy and security guardrails around agents. More to come.

Have a great week.

Joyce Li