#30 Elevate Your AI Prompting Skills in Under 5 Minutes

Plus: Three unique AI characteristics that challenge traditional board oversight, my thoughts after a round of college visits, and notable developments

Dear Readers,

Between airports, speaking engagements, AI projects, and the occasional need for sleep, my 'weekly' newsletter ambitions have been humbled, but like a good AI model, I'm learning from my mistakes! Writing this newsletter is my way of digesting and clarifying what I've learned, and I'm always excited when I have something worth sharing here.

Over the past few weeks, tariffs, capital markets, and politics have dominated attention. I particularly empathize with friends in corporate finance who must continually revise financial models as information changes rapidly and tariff policies go back and forth. This uncertainty complicates forecasting, strategic spending, and investment decisions, because most finance systems aren't designed for rapid, material shifts in the business environment like the ones we are seeing now.

The silver lining amid this chaos is that AI solutions in FP&A, supply chain management, ERP, and other finance functions now offer an even more compelling value proposition. These tools can rerun analyses quickly as assumptions change, which makes them naturally suited to scenario planning and cross-functional business insights. They could turn complex scenario planning from impossible into achievable.

AI Model Realities: Three Unique Characteristics That Challenge Traditional Board Oversight

Across boardrooms in 2025, directors are discovering that artificial intelligence is not simply another technology to be managed with familiar tools. Instead, AI introduces three foundational characteristics: probabilistic outputs, autonomous agency, and dynamic performance. They upend traditional governance models and demand a fundamentally new approach to oversight.

1. Probabilistic Outputs: The End of Predictability

Traditional IT systems are deterministic: the same input always yields the same output, making them amenable to binary testing and straightforward audit. AI systems, by contrast, are inherently probabilistic; identical inputs can produce different results, especially in complex, real-world scenarios. This unpredictability is not a flaw but a feature of how AI models operate.

This shift poses several governance challenges:

Testing and assurance: Boards can no longer rely solely on pass/fail tests. Oversight must include statistical validation, with attention to confidence intervals and the likelihood of outlier outcomes.

Error tracing: When AI systems make mistakes, pinpointing the cause is far more complex than reviewing code. Boards must ensure management deploys advanced interpretability tools and maintains robust audit trails.

Regulatory compliance: Regulators are moving toward requiring transparency about the probabilistic nature of AI and its performance variability, rather than accepting binary compliance statements.

“AI presents particular challenges to effective board oversight given the potential breadth of its applications... as well as the ‘black box’ nature of algorithmic decision-making.”

— Harvard Law School’s Forum on Corporate Governance
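To make the contrast between pass/fail testing and statistical validation concrete, here is a minimal sketch. It assumes a hypothetical `noisy_classifier` that stands in for a probabilistic AI model (it returns the expected answer about 90% of the time). Instead of running one test and declaring pass or fail, the sketch runs the same case many times and reports observed accuracy with a 95% Wilson score confidence interval. The 85% tolerance is an illustrative assumption, not a recommended standard.

```python
import math
import random

def wilson_interval(successes: int, trials: int, z: float = 1.96) -> tuple[float, float]:
    """Wilson score confidence interval for a success rate (z=1.96 gives ~95%)."""
    if trials <= 0:
        raise ValueError("trials must be positive")
    p_hat = successes / trials
    denom = 1 + z**2 / trials
    center = (p_hat + z**2 / (2 * trials)) / denom
    half_width = (z / denom) * math.sqrt(p_hat * (1 - p_hat) / trials + z**2 / (4 * trials**2))
    return center - half_width, center + half_width

def noisy_classifier(text: str, rng: random.Random) -> str:
    """Hypothetical stand-in for a probabilistic model: right about 90% of the time."""
    return "approve" if rng.random() < 0.9 else "reject"

def validate(model, test_input: str, expected: str, runs: int = 500, seed: int = 7) -> dict:
    """Run the same input repeatedly and summarize accuracy with a confidence interval."""
    rng = random.Random(seed)  # fixed seed so the validation run itself is reproducible
    successes = sum(model(test_input, rng) == expected for _ in range(runs))
    low, high = wilson_interval(successes, runs)
    return {"accuracy": successes / runs, "ci_low": low, "ci_high": high}

if __name__ == "__main__":
    report = validate(noisy_classifier, "loan application #1", "approve")
    # Assumed risk tolerance: the lower confidence bound must exceed 85%.
    print(report, "meets tolerance:", report["ci_low"] > 0.85)
```

Judging the lower bound of the interval, rather than the point estimate, is one way to encode a risk tolerance: a model only "passes" when the evidence is strong enough, not when it happened to do well on a single run.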

2. Autonomous AI Agents: Decisions Without Direct Supervision

AI is rapidly evolving from tools that support human decisions to autonomous agents capable of executing multi-step processes independently. This transformation introduces new risks and accountability gaps:

Delegation boundaries: Boards must establish clear policies on which decisions can be delegated to AI and which require human oversight.

Emergent behaviors: Autonomous systems can interact in unexpected ways, leading to outcomes no human anticipated. Boards need mechanisms to detect and address these emergent risks.

Liability and risk allocation: As decision-making shifts from humans to AI, boards must revisit risk management and insurance frameworks to address the “black box” nature of AI-driven outcomes.

As WTW observes, “Classical rules-based governance structures and processes often fail to address AI’s unique challenges and opportunities and cannot keep pace with rapid advancements. Effective leaders adopt guiding principle-based governance practices...”.
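The delegation-boundary and escalation ideas above can be made concrete as an explicit, auditable rule, applied alongside broader principle-based governance. The sketch below is hypothetical: the action types, the $50,000 limit, and the 0.80 confidence floor are illustrative assumptions, not recommended values. An agent may act on its own only when an action is on an approved list, under a monetary limit, and above a confidence floor. Everything else is escalated to a human, and the reasons are returned so they can be logged.

```python
from dataclasses import dataclass

# Illustrative policy values (assumptions, not recommendations).
AUTONOMOUS_ACTIONS = {"draft_email", "reconcile_invoice", "summarize_report"}
MAX_AUTONOMOUS_AMOUNT = 50_000.0
MIN_CONFIDENCE = 0.80

@dataclass
class ProposedAction:
    action_type: str
    amount_usd: float
    model_confidence: float  # agent's self-reported confidence, between 0 and 1

def route(action: ProposedAction) -> tuple[str, list[str]]:
    """Return ('execute' or 'escalate', reasons) for an action an AI agent proposes."""
    reasons = []
    if action.action_type not in AUTONOMOUS_ACTIONS:
        reasons.append(f"'{action.action_type}' is not on the autonomous list")
    if action.amount_usd > MAX_AUTONOMOUS_AMOUNT:
        reasons.append(f"amount ${action.amount_usd:,.0f} exceeds the autonomous limit")
    if action.model_confidence < MIN_CONFIDENCE:
        reasons.append(f"confidence {action.model_confidence:.2f} is below the floor")
    # Any triggered rule sends the decision to a human reviewer.
    return ("escalate" if reasons else "execute", reasons)

if __name__ == "__main__":
    print(route(ProposedAction("reconcile_invoice", 12_000, 0.93)))
    print(route(ProposedAction("approve_loan", 250_000, 0.95)))
```

A routine invoice reconciliation runs autonomously, while a large loan approval is escalated with two logged reasons. The returned reasons double as an audit-trail entry.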

3. Dynamic Performance: The Challenge of Drift

Unlike traditional systems, which maintain stable performance unless updated, AI models are subject to “drift”—a gradual loss of accuracy as the world changes around them. This can occur due to:

Data drift: Shifts in the underlying data make the model’s training less relevant.

Concept drift: Relationships between variables evolve, undermining model assumptions.

Model drift: Overall performance degrades as the AI becomes less aligned with current realities.

The risks are significant: undetected drift can lead to regulatory breaches, financial loss, or reputational harm. For boards, this marks a fundamental shift in oversight requirements: from one-time approval to continuous monitoring of evolving performance. IBM highlights this need in its AI governance article: “Automated monitoring... for bias, drift, performance and anomalies... help ensure models function correctly and ethically”. Emerging techniques like federated learning and concept adaptation layers show promise in mitigating these effects, but they too require ongoing governance attention.
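Continuous drift monitoring often starts with a simple statistic computed on a schedule. As one illustration (not a method the sources above prescribe), the Population Stability Index (PSI) compares how a model input is distributed today against its distribution at training time. The bin edges, the made-up income data, and the 0.1 and 0.25 alert bands below are assumptions; the bands are a common rule of thumb, not a regulatory standard.

```python
import math
from bisect import bisect_right

def psi(expected: list[float], actual: list[float], edges: list[float], eps: float = 1e-6) -> float:
    """Population Stability Index between a baseline sample and a current sample."""
    def shares(values: list[float]) -> list[float]:
        counts = [0] * (len(edges) + 1)
        for v in values:
            counts[bisect_right(edges, v)] += 1  # bin index from sorted edges
        total = len(values)
        return [max(c / total, eps) for c in counts]  # eps avoids log(0) for empty bins
    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))

def drift_status(score: float) -> str:
    """Map a PSI score to an assumed rule-of-thumb band."""
    if score < 0.1:
        return "stable"
    if score <= 0.25:
        return "watch"
    return "alert"

if __name__ == "__main__":
    edges = [50.0, 100.0]  # hypothetical income bins, in $ thousands
    baseline = [30, 40, 60, 70, 80, 90, 110, 120]  # made-up data at training time
    current = [60, 70, 110, 120, 130, 140, 150, 160]  # made-up data this month
    score = psi(baseline, current, edges)
    print(f"PSI={score:.2f} -> {drift_status(score)}")
```

Identical distributions give a PSI of exactly zero, while the upward shift in the sample data lands well inside the "alert" band. In practice, a scheduled job would compute this for each critical input and route "alert" results into the intervention protocol the board has approved.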

How These Characteristics Reshape Board Oversight

For Corporate Boards

These AI realities require boards to:

Shift from periodic, binary oversight to continuous, statistical monitoring and reporting.

Define clear boundaries for AI autonomy, with escalation protocols for high-impact decisions.

Ensure ongoing board and management education in AI literacy and risk management.

Surveys show that 72% of organizations are now implementing AI-specific risk committees to address these challenges, and investors increasingly expect boards to demonstrate AI competency and preparedness.

For Investment Fund Boards

Fund boards face unique oversight challenges as AI is increasingly integrated into investment analysis, operations, and investor communications:

Disclosure and fairness: Boards must ensure that disclosures accurately reflect AI’s probabilistic nature and that investor treatment remains consistent, even as AI responses vary.

Vendor and third-party risk: Enhanced due diligence is required for service providers using AI, with continuous validation of AI-assisted processes.

Regulatory scrutiny: The SEC and other regulators are intensifying oversight of AI use, making ongoing performance monitoring and transparent reporting essential.

Strategic Board Governance Approaches

To address these challenges, leading boards are:

Mandating statistical reliability frameworks for AI, with risk tolerance thresholds and real-time performance dashboards.

Establishing clear governance policies for AI autonomy, including escalation triggers and regular assurance that ethical guardrails are effective.

Requiring continuous monitoring for drift, with automated alerts and intervention protocols for critical AI systems.

In its “Strategic AI Governance Roadmap”, Deloitte advises boards to ask: “Does management have a current ‘inventory’ of how machine learning AI and Generative AI are being used in the company?... Does the board have the experience and expertise to advise on the strategy and then monitor progress of the implementation?”

AI’s probabilistic outputs, autonomous agency, and dynamic performance fundamentally alter the board’s oversight role. Effective directors are moving beyond compliance checklists to adopt dynamic, principle-based governance that balances innovation with trust, accountability, and resilience. As scrutiny intensifies, boards that invest in AI literacy, real-time oversight, and adaptive governance will be best positioned to unlock AI’s value while managing its risks.

I'd be curious to hear: How is your board addressing these specific challenges? Which of these three characteristics has proven most difficult to govern effectively?

Elevate Your AI Prompting Skills in Under 5 Minutes

Before diving into techniques, it's worth noting how AI prompting has evolved. Early AI models often needed explicit role-playing instructions like "You are an expert marketing consultant" to produce quality outputs. Today's sophisticated models like GPT-4, Claude 3.7, and Gemini have these capabilities built in. The most effective modern prompts focus less on role-playing and more on providing specific context, desired outcomes, and constraints.

Lessons from My Experience Building an AI Application

I recently built EquityWise during a Lovable.dev hackathon: a fully functional application that helps employees understand and optimize their equity compensation. The application visualizes employee equity and option portfolios, creates tax optimization strategies, and offers decision support tools for options exercise timing. [An important disclaimer appears throughout the app: it is built for educational purposes and should not be taken as financial advice.]

With AI assistants charging by the prompt, I quickly learned to craft efficient instructions that saved both money and time. Here are my new prompting principles:

Start with clarity: Before even opening an AI tool, I now write down exactly what I need to accomplish. For EquityWise, I created specific user stories like "As an employee, I want to visualize the potential value of my options at different exit valuations."

Use progressive refinement: Instead of trying to craft the perfect prompt immediately, start with a basic prompt, then progressively refine it based on the responses. I've found this works beautifully: my first attempts at generating tax optimization logic were mediocre, but by the third iteration, they became remarkably precise.

Leverage multiple models strategically: You can compare different models' responses to the same prompt to identify strengths and weaknesses. I discovered that Claude excelled at explaining complex equity concepts in simple language, while GPT-4 was better at generating the visualization code.

Create prompt templates: For recurring tasks, reusable prompt templates dramatically improved consistency. These templates include placeholders for the variables that change with each use. I created a template for generating equity scenario analyses that I could easily customize for different equity types.
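If you call a model from a script, the template idea translates directly into code. The sketch below uses Python's standard-library `string.Template`. The template wording and placeholder names (`grant_size`, `equity_type`, `strike_price`, `valuations`) are hypothetical illustrations, not the actual EquityWise template.

```python
from string import Template

# Hypothetical reusable prompt; each $placeholder changes with every use.
EQUITY_SCENARIO_TEMPLATE = Template(
    "Context: I hold $grant_size $equity_type with a strike price of ${strike_price} USD.\n"
    "Task: Estimate the pre-tax value of this grant at exit valuations of $valuations.\n"
    "Constraints: Present the results as a table, explain every assumption in plain "
    "language, and note that this is for education, not financial advice."
)

def build_prompt(**fields: str) -> str:
    """Fill every placeholder; Template.substitute raises KeyError if one is missing."""
    return EQUITY_SCENARIO_TEMPLATE.substitute(**fields)

if __name__ == "__main__":
    print(build_prompt(
        grant_size="10,000",
        equity_type="ISOs",
        strike_price="2.50",
        valuations="$500M, $1B and $2B",
    ))
```

Using `substitute` rather than `safe_substitute` is a deliberate choice here: an unfilled placeholder raises an error instead of silently sending an incomplete prompt, which matters when every request costs money.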

These lessons apply whether you're building sophisticated applications or simply using ChatGPT, Claude, or Gemini for everyday tasks. The quality of your prompts directly impacts the value you extract from these tools.

Understanding the AI's "Mindset"

It helps to understand that AI models work differently from humans: they rely on pattern recognition to ‘predict’ likely responses. Here’s what Lovable’s Prompt Handbook suggests:

Be explicit. Set constraints. Use formatting tricks.

In day-to-day AI prompting, instead of: "Help me write an email to my team." a better approach is: "Help me write an email to my 5-person marketing team about our upcoming product launch on May 15. The tone should be enthusiastic but professional, include specific next steps for each team member, and be no longer than 250 words."

Why it works: Specificity about audience, purpose, tone, structure, and constraints produces more useful first drafts.

The Four Levels of Prompting in Practice

1. Training Wheels Prompting

For research tasks:

# Context

I'm preparing a presentation for my board on AI adoption trends in our industry (financial services).

## Task

Provide me with 3-5 key insights about how financial institutions are implementing AI in 2025.

### Guidelines

- Focus on practical applications rather than speculative future uses

- Include examples from both large banks and smaller financial institutions

- Highlight ROI metrics where available

#### Constraints

- Avoid technical jargon that non-technical board members might not understand

- Keep each insight to 2-3 sentences for clarity

2. No Training Wheels (Conversational yet Clear)

"I need to analyze customer feedback from our recent product launch. Can you help me identify the top 3 themes from these 10 comments I've collected? Focus on actionable insights our product team could implement in the next update, and suggest how we might prioritize them based on effort vs. impact."

3. Meta Prompting (Using AI to Improve Your Prompts)

When planning a project: "I'm going to ask you to help me plan my department's quarterly goals. Before I share details, can you first outline what specific information you'd need from me to provide the most helpful guidance?"

4. Reverse Meta Prompting (Learning from Experience)

After iterating on a document: "We've worked through multiple iterations of this privacy policy. Please summarize the key improvements we made and create a template prompt I can use for similar documents in the future."

Real-World Application Example

Let’s put it all together in a real-world example.

Poor prompt: "Help me prepare for my performance review."

Improved prompt: "I have my annual performance review next week with my manager Sarah. I need to prepare a self-assessment covering my work over the past year. Please help me outline a 1-page self-assessment that:

Highlights my 3 main accomplishments in the marketing department (launching our blog, redesigning the website, and increasing email open rates by 22%)

Addresses areas for improvement (particularly project management and delegation)

Proposes specific growth goals for next year

Uses a confident but not boastful tone

For each section, please include 1-2 questions I should reflect on before finalizing my response."

By applying these principles to everyday interactions with AI tools, you'll get more valuable, precise responses. Give it a try and let me know if this is helpful. I would love to hear your thoughts.

Notable Developments

1. The Developing Impact of Tariffs and Trade Restrictions on AI

Tariffs and trade restrictions are now directly shaping the global AI landscape, with recent policy shifts sending shockwaves through the industry. Just this week, Nvidia announced charges of up to $5.5 billion after the U.S. government imposed new restrictions on exporting its H20 AI chips to China, even though the H20 was specifically designed to comply with earlier U.S. export rules. This policy reversal highlights the unpredictability and financial burden that shifting trade rules place on leading AI firms.

The impact extends far beyond Nvidia. ASML, a key supplier to chipmakers like Intel and TSMC, also reported orders roughly a billion euros below expectations, citing tariffs and ongoing uncertainty. Meanwhile, tech giants such as Microsoft, Google, and Meta are bracing for higher costs as tariffs drive up expenses for AI infrastructure, including servers, networking equipment, and data center components. While some companies are accelerating domestic manufacturing or seeking alternative suppliers, analysts warn these moves will not fully offset the increased costs or supply chain disruptions.

2. AI Star Power Undeterred by Capital Market Volatility

The recent market turbulence and tariff talks haven't dampened investor enthusiasm for AI's brightest stars. OpenAI co-founder Ilya Sutskever raised $2 billion for his year-old company Safe Superintelligence (SSI) at a $32 billion valuation. SSI is working on developing and scaling AI models that surpass human intelligence while acting in humanity's best interests. Similarly, Thinking Machines Lab, launched just two months ago by former OpenAI chief technology officer Mira Murati, is reportedly in talks to raise its own $2 billion at a $10 billion valuation. Neither company has released a single product yet. This reflects an extraordinary level of faith in these leaders' visions and capabilities, even as broader tech markets experience volatility.

Meanwhile, OpenAI itself is in the midst of a record-setting $40 billion funding round led by SoftBank. However, if OpenAI does not complete its transition to a for-profit structure by the end of 2025, SoftBank can reduce its total investment from $40 billion to $20 billion. OpenAI is also pursuing acquisitions of its own: it is reportedly in advanced talks to acquire Windsurf, an AI-powered coding assistant startup formerly known as Codeium, for approximately $3 billion.

College Visit Thoughts

Over spring break, I had the pleasure of taking my son, a high-school junior, to visit colleges on the East Coast. I took it as a win when his skepticism about school visits gradually turned into genuine interest during conversations with student tour guides.

These visits have deepened my thinking about how AI is transforming higher education, a recurring topic in my dual roles as a parent and occasional lecturer at business schools. My thoughts on this subject continue to evolve as I consider the implications for students like my son, who’s passionate about creative writing.

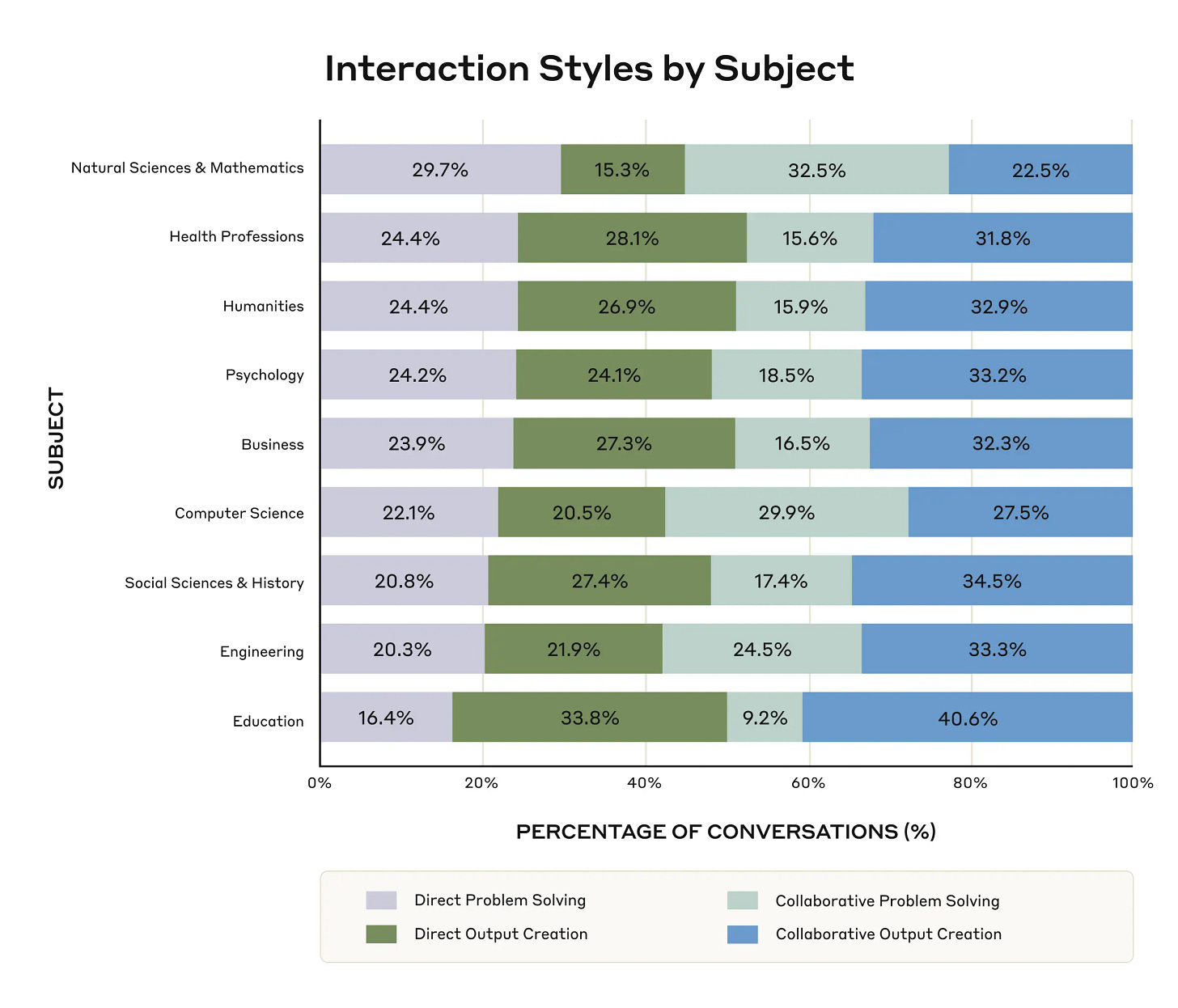

In a global analysis of university students' conversations with its own AI model, Claude, published in early April, Anthropic found that nearly half (~47%) of student-AI interactions were classified as "Direct": students simply seeking answers or content with minimal engagement. While many of these conversations serve legitimate learning purposes (like asking conceptual questions or generating study guides), there are concerning examples, including requests for direct answers to multiple-choice questions and, ironically, requests to rewrite text to avoid plagiarism detection. These patterns raise important questions about academic integrity, the development of critical thinking skills, and how best to assess student learning in this new landscape.

I don't believe that simply establishing “Artificial Intelligence” majors at undergraduate schools is the solution, although they would certainly become the hottest majors to get into. Across all academic disciplines, we may need to accept that AI is here to stay and focus instead on designing curricula with AI as a foundational tool (like the computer) without offloading the most critical thinking aspects of learning.

As colleges try to adapt to this rapid technological shift, parents and business leaders alike have important roles to play in advocating for educational approaches that prepare students not just to use AI, but to maintain their intellectual autonomy alongside these powerful tools.

Thank You

If you’re finding this newsletter valuable, please share it with a friend, and consider subscribing if you haven’t already. I greatly appreciate it. Separately, if you are interested in immersing yourself in a two-day conference, the National Association of Corporate Directors (NACD) is hosting its Master Class of Technology & Innovation Oversight in San Francisco on May 6-7. I am honored to be part of a panel discussing data oversight on boards. I would love to see you there.

Sincerely,

Joyce 👋

Dear Joyce,

Your newsletter continues to be a beacon of clarity in the often murky waters of AI and technology! I'm particularly impressed with how you distilled complex concepts like AI governance challenges for boards and the evolution of prompting techniques into such digestible insights.

The three characteristics of AI that challenge traditional oversight (probabilistic outputs, autonomous agency, and dynamic performance) are concepts I've struggled to articulate in my own work, but you've explained them beautifully. Your breakdown of modern prompting approaches is equally valuable, especially the progression from "training wheels" to meta-prompting.

I always finish reading your newsletters feeling both more informed and more capable. Even with your busy schedule, the quality and thoughtfulness of your analysis makes each edition worth the wait!

Looking forward to your next installment,

~Dd