#28 The Illusion of Competence: How AI Erodes Critical Thinking

Plus: Takeaways from MFDF Panel, Humanoid Robots That Can See and Learn, and More

Dear Readers,

In this issue, I cover:

Notable Developments:

Google Introduces AI Co-Scientist

Microsoft/OpenAI Partnership Becomes Increasingly Non-Exclusive

CoreWeave Acquires Weights & Biases Ahead of IPO

The Illusion of Competence: How AI Erodes Critical Thinking and What We Can Do About It

Directors’ Corner: Takeaways from MFDF Panel on AI and Portfolio Management

AI's Physical Leap: Humanoid Robots That Can See and Learn

A quick note:

I'm going to give a weekly publishing schedule a try.

The pace of AI developments affecting board and business decisions has accelerated significantly. These weekly editions will be shorter and more focused, delivering the most essential insights without overwhelming your inbox.

Many of you have mentioned how these updates directly inform your strategic conversations, and this format ensures you have timely information when you need it: concise, actionable, and more frequent.

Thank you for your support and feedback. Please feel free to reach out any time.

1. Google Introduces AI Co-Scientist

Unlike tools that focus on literature review or data analysis, Google's new AI Co-Scientist generates novel hypotheses, designs experiments, and even runs internal debates to refine its ideas. Its ability to solve complex problems rapidly, illustrated by a case reported in Forbes in which it cracked a decade-old scientific mystery in just two days, shows its potential to accelerate scientific breakthroughs. Access is currently limited to select biomedical researchers.

2. Microsoft/OpenAI Partnership Becomes Increasingly Non-Exclusive

In a recent podcast interview with Dwarkesh Patel, Microsoft CEO Satya Nadella emphasized the importance of AI inference over training. He argued that AI agents will exponentially increase compute usage, that AI model training is becoming commoditized, and that self-declared “AGI” milestones amount to “nonsensical benchmark hacking.” Nadella stressed Microsoft's role in providing compute infrastructure for a wide range of AI workloads, signaling a potentially less exclusive relationship with OpenAI and a broader focus on serving multiple AI companies and models.

3. CoreWeave Acquires Weights & Biases Ahead of IPO

AI infrastructure provider CoreWeave is acquiring model development platform Weights & Biases in a deal reportedly worth $1.4–1.7B. The acquisition combines CoreWeave's GPU cloud infrastructure with W&B's AI development tools, which are used by over 1 million engineers, creating an end-to-end AI development environment. CoreWeave, which recently filed for an IPO, reported 700% revenue growth to $1.92B last year, with $15B in pending contracts. However, its capex-heavy business model requires substantial capital to sustain the current buildout pace. Its largest customer, Microsoft, has recently discussed plans to reduce its reliance on externally leased AI data center capacity after 2025. It remains to be seen whether this will affect CoreWeave's future growth.

The Illusion of Competence: How AI Erodes Critical Thinking and What We Can Do About It

The pace at which generative AI has infiltrated academic and professional environments is staggering. A recent UK study found that 92% of undergraduates now use generative AI in some form, up from 66% last year, with 88% applying it directly to their assessments. This rapid adoption reflects a fundamental shift in how we work and learn, one that both educational institutions and employers are struggling to navigate.

The Critical Thinking Paradox

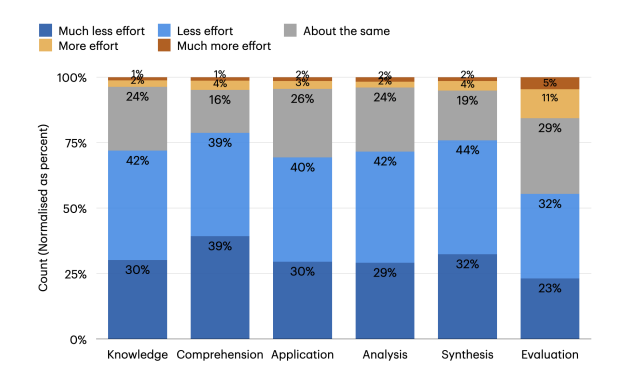

A study by researchers at Microsoft and Carnegie Mellon University, published in February 2025, documents several profound shifts in how AI is changing our cognitive processes. Surveying 319 knowledge workers across diverse occupations, the researchers identified three significant transformations in critical thinking:

From information gathering to verification: While AI dramatically reduces effort in retrieving and organizing information, workers now invest more cognitive effort in verifying AI outputs against external sources.

From problem-solving to response integration: GenAI excels at providing personalized solutions to problems, but users must develop new skills to effectively apply these solutions to their specific contexts.

From task execution to task stewardship: Knowledge work is shifting away from direct production toward a higher-level supervisory role, which the researchers describe as "stewardship" rather than execution.

The researchers discovered a particularly telling correlation: higher confidence in AI is associated with less critical thinking, even as these tools make such thinking seem less effortful.

This contributes to what the researchers term "mechanized convergence": users with access to AI tools produce less diverse outcomes for the same task than those without. This convergence reflects a lack of personalized, contextual judgment and may signal deteriorating critical thinking skills over time.

The Entry-Level Skills Gap

However, these reductions in perceived effort may come at a long-term cost. As Lisanne Bainbridge observed in her 1983 paper "Ironies of Automation," when technology handles routine tasks and leaves the exceptions to humans, it deprives people of regular opportunities to practice and strengthen their cognitive abilities, potentially leaving them unprepared when more complex situations arise.

As AI increasingly handles tasks that once served as training grounds for early-career professionals, today's students may not have the same opportunities to develop fundamental skills through entry-level finance and other professional roles. Traditional learning pathways are being disrupted, creating an urgent need for new approaches to talent development.

This challenge is particularly acute in fields like finance, where junior analysts once learned by doing the data gathering and basic analysis that AI now accomplishes in seconds. How do we ensure early-career professionals develop the foundational skills needed for advancement when their traditional entry points are being automated?

What We Can Do About It

1. Reimagine Assessment Methods

Organizations need new approaches to evaluating talent in an AI-augmented world. Companies like NextByte are pioneering "vibe coding" assessments that evaluate candidates' ability to direct AI effectively rather than testing knowledge in isolation. Similar approaches could work across industries, focusing on:

Ability to verify and critically evaluate AI-generated information

Skill in guiding and iteratively improving AI responses

Capacity to apply domain expertise to enhance AI outputs

Judgment in knowing when to rely on AI and when human analysis is superior

2. Revamp Educational Frameworks

Universities must fundamentally rethink their approach to teaching in an AI-powered world. Forward-thinking educators are creating learning experiences that deliberately foster metacognitive awareness, helping students recognize when they're offloading thinking versus engaging deeply with material.

The most effective educational programs now incorporate verification strategies and source evaluation as core skills, teaching students not just to use AI, but to question and validate its outputs. This approach acknowledges that in a world of abundant information, discernment becomes more valuable than recall. Curriculum designers are increasingly focusing on the skills that remain uniquely human: ethical reasoning, contextual judgment, and strategic thinking that considers not just what can be done but what should be done.

3. Transform Organizational Practices

Leading organizations recognize that developing critical thinking alongside AI fluency requires intentional design. They're creating structured opportunities for early-career professionals to develop judgment through guided practice, rather than assuming these skills will emerge organically.

Mentorship has taken on new importance, with experienced professionals guiding newcomers not just in technical skills but in the nuanced decision-making that AI cannot replicate. The most effective training programs focus less on tool mastery and more on strategic application: teaching when to use AI, when to question it, and how to integrate its outputs into broader organizational contexts.

4. Cultivate Self-Awareness

Microsoft's 2024 Work Trend Index points to a concerning psychological dimension of AI use: 52% of people who use AI at work are reluctant to admit using it for their most important tasks, and 53% worry it makes them look replaceable. Breaking through this hesitation requires creating environments where transparency about AI use is normalized and valued. Organizations that handle this well establish psychological safety by celebrating examples where human judgment successfully enhanced or corrected AI outputs.

Leading teams explicitly value and reward the uniquely human contributions that complement AI capabilities, including creativity, empathy, ethical reasoning, and contextual judgment. They encourage regular reflection on how AI tools shape thinking processes, sometimes through structured exercises where team members compare their approaches to the same problem with and without AI assistance.

The real long-term value will come not from using AI to do the same work faster, but from fundamentally elevating work quality, creativity, and strategic impact. The most successful individuals and organizations will be those who develop the critical thinking skills needed to use AI not just as a productivity tool, but as a catalyst for higher-order thinking and more valuable work.

Directors’ Corner: Takeaways from MFDF Panel on AI and Portfolio Management

MFDF (the Mutual Fund Directors Forum) is the premier organization supporting independent directors of mutual funds, ETFs, and other registered investment companies through education, research, and networking. Last week I participated in an MFDF panel on AI and portfolio management (a recording is available to members) and came away with some observations worth sharing.

27% of Fund Board Trustees Say AI Is Used in Investments, Half the Level Reported in Industry Surveys

When we asked the audience of fund board trustees directly whether the funds they oversee use AI in portfolio management, only 27% said yes, dramatically lower than the mid-50% range reported in industry surveys.

My conversations with trustees and portfolio managers suggest that the gap stems from how a question such as "Is AI being used in making investments?" is interpreted. Many advisers read it narrowly, answering whether AI systems make final investment decisions, while trustees are asking about the entire investment process. As a result, board leaders often don't realize how deeply AI is already embedded in their operations. AI “quietly” powers data cleaning, insight generation, and research tools without being labeled as such. This "invisible AI" creates significant governance blind spots for boards trying to provide proper oversight.

Hallucinations: Mitigation and Benefits

We hold AI to much higher standards than human analysts, who also make mistakes. A key reason is the well-known tendency of AI systems to hallucinate. How do we mitigate AI hallucinations in investment processes? Three practical approaches stood out from our discussion:

Training AI to say "I don't know" when uncertain rather than making things up

Grounding AI responses in trusted data sources using techniques like retrieval-augmented generation (RAG)

Using reasoning models that show their work step-by-step, making it easier to audit their process and quality
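For readers curious what the first two approaches look like mechanically, here is a minimal Python sketch, not a production design. It assumes a tiny hypothetical "trusted corpus" and uses simple word overlap (Jaccard similarity) as a stand-in for the embedding search a real RAG system would use; no language model is called. The function names, source identifiers, and the 0.2 relevance threshold are all illustrative assumptions. The key idea is that the system only answers from retrieved evidence, cites its source, and abstains when the evidence is too weak.

```python
from __future__ import annotations

import re
from dataclasses import dataclass


@dataclass
class Document:
    source: str  # hypothetical identifier of a trusted, approved data source
    text: str


def tokenize(text: str) -> set[str]:
    """Lowercased word tokens: a crude stand-in for real embeddings."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, corpus: list[Document]) -> tuple[Document | None, float]:
    """Return the trusted document with the highest word overlap (Jaccard similarity)."""
    q = tokenize(question)
    best_doc, best_score = None, 0.0
    for doc in corpus:
        d = tokenize(doc.text)
        union = q | d
        score = len(q & d) / len(union) if union else 0.0
        if score > best_score:
            best_doc, best_score = doc, score
    return best_doc, best_score


def grounded_answer(question: str, corpus: list[Document], min_score: float = 0.2) -> str:
    """Answer only from retrieved evidence, citing it; abstain when evidence is weak."""
    doc, score = retrieve(question, corpus)
    if doc is None or score < min_score:
        # Abstain instead of guessing: the "I don't know" behavior.
        return "I don't know: no trusted source covers this question."
    # A real system would pass doc.text to a language model as context;
    # here we return the passage with its source so the answer is auditable.
    return f"{doc.text} [source: {doc.source}]"


# Hypothetical trusted corpus, for illustration only.
CORPUS = [
    Document("fund_factsheet_2024", "The fund expense ratio is 0.45 percent."),
    Document("board_minutes_q3", "The board approved the advisory contract renewal in September."),
]

if __name__ == "__main__":
    print(grounded_answer("What is the fund expense ratio?", CORPUS))
    print(grounded_answer("Which robots can fold laundry?", CORPUS))
```

The first question returns the fact-sheet sentence tagged with its source; the second matches nothing in the corpus, so the sketch says "I don't know" rather than inventing an answer. The threshold is the governance lever: raising it makes the system abstain more often in exchange for fewer unsupported answers.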

In AI-first investment processes, a firm could adopt a pragmatic approach in which AI does most of the analysis and portfolio management, and a human validates the portfolio before implementation.

However, we have to recognize that some hallucinations are actually desirable. Contrarian investment theses, often the source of the greatest returns, require tolerance for being wrong. By demanding that AI be flawless, we might paradoxically limit its ability to generate the creative, non-consensus views that drive outperformance.

What Fund Boards Should Focus On in the 15(c) Process

Integrating AI oversight into the 15(c) process, the board's review of advisory contracts under Section 15(c) of the Investment Company Act of 1940, was a major focus of our discussion. Several boards are now adding AI-specific questionnaires to their due diligence.

Rather than getting lost in technical details, effective board oversight focuses on:

Documentation and recordkeeping: Can the firm produce records showing why specific AI-driven investment decisions were made?

Testing methodologies: What evidence shows the AI models perform as expected across different market conditions?

Team expertise: Does the investment team implementing AI have the right skill set to understand the technology they're using?

Compliance program updates: Has the compliance function been modified to address unique AI risks?

As one panelist noted, "It's great having fancy AI tools, but if you can't explain to regulators why your portfolio contains what it does, you're asking for trouble." This reinforces that the 15(c) process needs to evolve alongside the technology.

Democratization of AI Access

One piece of encouraging news emerged for smaller firms concerned about competing with deep-pocketed players: AI costs are plummeting.

The same AI models that were prohibitively expensive just last year now cost about 1/20th of their previous price. This means it's not only large firms that can afford to incorporate AI into their processes, which is very positive news for smaller ones.

The investment industry stands at an inflection point similar to the passive investing revolution of the 1990s. Those who understand how to thoughtfully integrate AI capabilities while maintaining proper governance will likely thrive, while those who either ignore it or implement without adequate controls may find themselves at a significant disadvantage.

AI's Physical Leap: Humanoid Robots That Can See and Learn

Robotics has long been used in manufacturing, especially in Japan and China, where tasks are well defined and improvements have focused on hardware performance and cost. AI-powered robots, however, face a big challenge: unlike large language models, which are trained on vast amounts of internet text, they lack sufficient labeled 3D data to learn how to navigate and interact with the physical world.

Fortunately, brilliant researchers and innovators are making remarkable progress. The latest breakthroughs focus on self-learning, observational skills, and real-time responsiveness. Figure AI's recent Helix announcement represents a significant leap forward: it's a Vision-Language-Action (VLA) model that creates a unified system for perception, language understanding, and control.

What makes Helix revolutionary is its ability to:

Control a humanoid's entire upper body, including individual fingers

Enable collaboration between multiple robots on shared tasks

Pick up virtually any household object, even ones never seen before

Run on a single neural network without task-specific fine-tuning

Operate entirely on onboard low-power GPUs, making it commercially viable today

Brett Adcock, CEO of Figure, shared his vision in a recent, insightful interview on the Amit Kukreja Podcast. While some companies concentrate solely on AI software, Figure takes a different approach: it vertically integrates hardware and software, which it believes is essential to building the best humanoid robots.

Their approach:

🤖 NVIDIA GPUs power both training and onboard inference, enabling real-time decision-making.

🤖 Foundation models combine open-source, proprietary, and real-world data collected from their own robots.

🤖 Hardware-software synergy optimizes performance, ensuring AI is trained on well-designed hardware.

One key factor is scale. Companies that achieve high unit volumes, drive down costs, and collect vast real-world data will have a competitive edge. Scaling manufacturing is another challenge entirely, but unlike car production, robotics manufacturing could be less capital-intensive and more flexible. Robots are small enough for humans to handle during assembly, so processes can be customized without requiring full automation. This could become a winner-takes-most market with enormous long-term potential.

What applications of humanoid robots are you most excited about? Are you, like me, looking forward to robots as capable and empathetic caregivers?

Thank You

If you’re finding this newsletter valuable, please share it with a friend, and consider subscribing if you haven’t already. I greatly appreciate it.

Sincerely,

Joyce 👋
