#27 DeepSeek's Rise: What Directors Are Asking

Plus: AI Makes Its Mark on Research, Reading Recommendation on the Future of Corporate Finance, Cursor Reached $100m in Revenue, Tech Job Cuts, and More

Dear Readers,

In this issue, I cover:

Notable Developments:

Cursor Reached $100m in Revenue in 12 Months

Tech Companies Make Further Job Cuts to Invest In AI

The $500bn Stargate Project Faces Skepticism

Alexa Might Finally Get Smarter

Directors’ Corner: DeepSeek's Rise: What Directors Are Asking

AI Makes Its Mark on Research

The Future of Corporate Finance [Reading Recommendation]

Enjoy. All past issues can be viewed here.

Since I'm sending this newsletter right before the big game: GO BIRDS 💚🦅💚! (And please brace yourself for some cringe-worthy AI ads.)

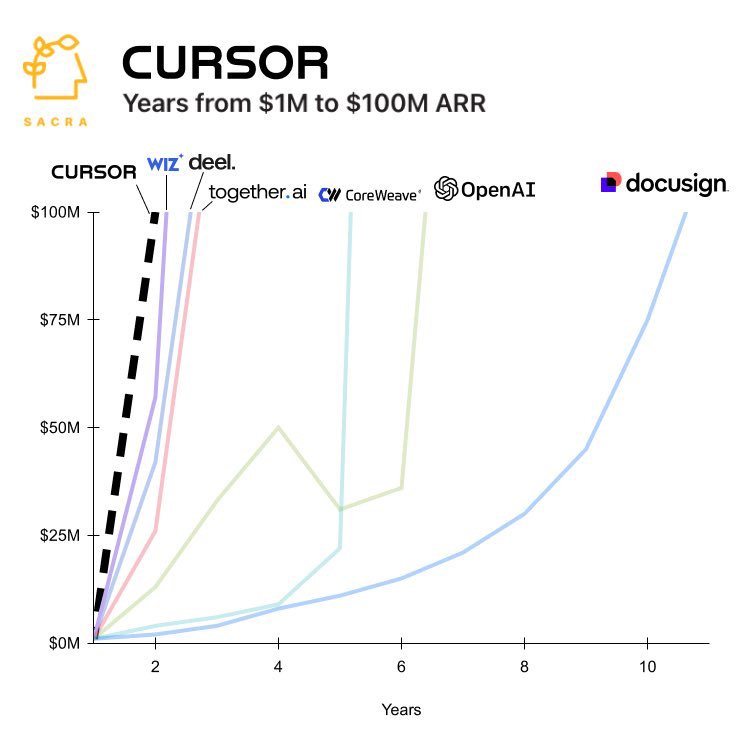

1. AI Coding Assistant Cursor Reached $100m in Revenue in 12 Months

Cursor, the AI-powered coding assistant I have recommended a few times in the past, hit a huge milestone: $100 million in annual recurring revenue (ARR) within 12 months, reportedly faster than any SaaS company before it. That represents a staggering 9,900% year-over-year increase from $1 million in 2023, driven by adoption among more than 360,000 developers paying $20 to $40 per month. Cursor's success highlights the transformative potential of AI applications that deliver clear ROI by boosting productivity.
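As a quick sanity check on these figures, a few lines of Python reproduce the growth rate and show that 360,000 paying developers at $20 to $40 per month brackets the $100m ARR figure. This is a back-of-envelope sketch that assumes every subscriber pays a flat monthly price all year; it ignores enterprise contracts, discounts, and churn.

```python
# Back-of-envelope check of Cursor's reported figures (inputs taken from the text above).

def yoy_growth_pct(start: float, end: float) -> float:
    """Year-over-year growth as a percentage: (end - start) / start * 100."""
    return (end - start) / start * 100

def implied_arr_range(subscribers: int, low_monthly: float, high_monthly: float) -> tuple[float, float]:
    """ARR bounds if every subscriber paid the low or the high monthly price for 12 months."""
    return subscribers * low_monthly * 12, subscribers * high_monthly * 12

growth = yoy_growth_pct(1_000_000, 100_000_000)
low, high = implied_arr_range(360_000, 20, 40)

print(f"YoY growth: {growth:,.0f}%")                        # YoY growth: 9,900%
print(f"Implied ARR: ${low / 1e6:.1f}m to ${high / 1e6:.1f}m")  # Implied ARR: $86.4m to $172.8m
```

The reported $100m sits inside the $86.4m to $172.8m range, which suggests most subscribers are on the lower-priced plan.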

2. Tech Companies Make Further Job Cuts to Invest In AI

Tech companies are explicitly tying workforce restructuring to their AI investments. Salesforce CEO Marc Benioff suggested the company might not hire any software engineers in 2025, while simultaneously announcing 1,000 layoffs (1.5% of staff). Workday announced plans to cut 1,750 positions (8.5% of its workforce), and Meta's Mark Zuckerberg spoke openly about AI replacing mid-level engineers this year as the company prepares to cut 5% of its workforce.

The pace of this disruption to white-collar workers is concerning. Unlike previous technological shifts that allowed for gradual adaptation, today's workers are facing rapid displacement without adequate time or resources to pivot their careers. For business leaders, this is a critical moment to demonstrate responsible AI adoption by allocating resources to reskilling programs and helping people in transition. The future of work doesn't have to be a zero-sum game between AI and human talent. Let's make sure it isn't.

3. The $500bn Stargate Project Faces Skepticism

The Stargate Project is a massive $500 billion AI initiative, announced in January 2025, to build advanced AI infrastructure across the United States. It is a collaboration among OpenAI, SoftBank, Oracle, and the investment firm MGX, with SoftBank leading the financing and OpenAI overseeing operations.

The initiative has been met with skepticism about whether its funding is actually in place, particularly given the scale of the investment alongside SoftBank's planned $40 billion stake in OpenAI. Concerns have also been raised about the feasibility of securing enough land and energy for data center construction at this scale. Additionally, the project's focus on OpenAI as its primary beneficiary has drawn criticism for potentially limiting broader industry collaboration.

SoftBank CEO Masayoshi Son’s reputation for grandiose visions with inconsistent execution has further fueled doubts about the project’s viability. Microsoft’s move away from being OpenAI’s exclusive computing partner was expected but is still worth watching.

4. Alexa Might Finally Get Smarter

Amazon is finally turning to Anthropic's Claude to bring Alexa into the modern AI era, after two years of unsuccessful in-house development. The release is planned for late February. Alexa has over 100 million active users and more than 500 million Alexa-enabled devices, which are used mainly for basic tasks like alarms and weather reports. Let’s see if Alexa can finally get smarter.

DeepSeek's Rise: What Directors Are Asking

DeepSeek, the Chinese open-source AI model, has captured attention by matching the performance of leading models like OpenAI's o1 at a significantly lower cost, sparking volatility in AI stocks and heated debate about the future of AI development.

Within weeks of its release, developers had created thousands of variants of AI reasoning models using DeepSeek's open-source approach. You can read a technical deep dive by SemiAnalysis here.

Yet its rise raises critical questions about privacy, security, and the commoditization of AI models that cost hundreds of millions to billions of dollars to train. For board directors tasked with guiding strategy, governance, and risk, these developments demand immediate attention.

Here are some common questions I've received, along with my perspective:

Q: What makes DeepSeek such a big deal?

DeepSeek represents a breakthrough in large language models. Its mixture-of-experts architecture and optimized distillation techniques drastically reduced training expenses while maintaining high-quality reasoning capabilities. Its all-in training cost may well be higher than the reported $6m, but industry experts agree that it was at least 40% cheaper than OpenAI's cost to build o1.

Moreover, DeepSeek’s open-source nature accelerates innovation globally. Because it comes with open weights, a research paper detailing its approach, and the highly permissive MIT license, developers can modify and deploy the model freely, fostering rapid advances across industries. Wenfeng Liang, DeepSeek’s CEO, has emphasized that the company's goal is not merely commercialization but contributing foundational AGI research to the global community. We can expect the next generation of AI models to be built at lower cost and with less energy than was possible before DeepSeek.
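For readers curious why a mixture-of-experts design saves compute: a small router scores each "expert" sub-network for a given input, and only the top few actually run, so most of the model's parameters sit idle on any given token. The toy below is a generic top-k gating sketch in plain Python with made-up experts and router weights (`moe_forward`, `gate_weights`, and the scaling experts are all hypothetical); it is not DeepSeek's implementation, which uses far larger experts plus refinements not shown here.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def moe_forward(x, gate_weights, experts, top_k=2):
    """Toy mixture-of-experts layer with top-k routing.

    x: input vector (list of floats).
    gate_weights: one router weight vector per expert (hypothetical linear router).
    experts: list of functions, each mapping a vector to a vector of the same length.
    Returns the mixed output and the indices of the experts that actually ran.
    """
    # 1. Router: score every expert with a dot product against x.
    scores = [sum(w_i * x_i for w_i, x_i in zip(w, x)) for w in gate_weights]
    # 2. Keep only the top_k experts; the others are skipped entirely.
    chosen = sorted(range(len(experts)), key=lambda i: scores[i], reverse=True)[:top_k]
    # 3. Softmax over the chosen experts' scores gives the mixing weights.
    mix = softmax([scores[i] for i in chosen])
    # 4. Run only the chosen experts and take the weighted sum of their outputs.
    out = [0.0] * len(x)
    for weight, i in zip(mix, chosen):
        y = experts[i](x)
        out = [o + weight * y_j for o, y_j in zip(out, y)]
    return out, chosen

# Usage: 8 made-up experts, each just scales its input by a different factor.
experts = [lambda v, s=i + 1: [s * t for t in v] for i in range(8)]
gate_weights = [[float(i), 0.0] for i in range(8)]  # router favors higher-numbered experts
out, chosen = moe_forward([1.0, 0.0], gate_weights, experts, top_k=2)
print(chosen)  # [7, 6]: only 2 of the 8 experts did any work
```

In a real model each expert is a large neural network layer, so skipping most experts on every token is where the savings come from.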

Q: How will DeepSeek impact pricing in the AI industry?

DeepSeek has redefined the economics of AI by offering services at a fraction of what OpenAI charges. For instance, processing one million tokens with DeepSeek costs just $0.55, compared to $10 or more for similar models from competitors. Since DeepSeek's release, pricing strategies across the industry have been recalibrated fairly quickly. The immediate result is that AI services are becoming more accessible to smaller enterprises and startups, democratizing innovation.
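To make the price gap concrete, the sketch below prices a hypothetical workload of 2 billion tokens per month at the two per-million-token rates quoted above. Real pricing differs for input versus output tokens and by model, so treat this as an illustration of scale rather than a quote.

```python
def monthly_cost(tokens_per_month: int, price_per_million: float) -> float:
    """Cost in dollars for a month's token volume at a flat per-million-token price."""
    return tokens_per_month / 1_000_000 * price_per_million

volume = 2_000_000_000  # hypothetical workload: 2 billion tokens per month
deepseek = monthly_cost(volume, 0.55)
competitor = monthly_cost(volume, 10.00)

print(f"DeepSeek:   ${deepseek:,.0f}")         # DeepSeek:   $1,100
print(f"Competitor: ${competitor:,.0f}")       # Competitor: $20,000
print(f"Ratio: {competitor / deepseek:.1f}x")  # Ratio: 18.2x
```

At roughly an 18x difference, a workload that would strain a startup's budget at competitor prices becomes a rounding error.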

Q: Will this lead to further commoditization of AI large language models?

Yes. AI models outside the top few face big challenges in gaining user adoption and competing on token cost. Their existing asset values may face write-down pressure, which could also dampen venture capital appetite for funding companies beyond those led by well-known founders. Instead, value is shifting toward specialized applications tailored to specific industries or use cases. For example, while DeepSeek’s open weights make it accessible to all, enterprises may look for customized solutions that integrate proprietary data, align with their unique needs, and run on their private cloud with security guardrails.

Q: Should we be concerned about privacy and security deficiencies of DeepSeek?

Yes, with a twist. DeepSeek's servers are located in China, and it gives carefully scripted answers on politically sensitive topics, suggesting its outputs are censored. Its user agreement has left many questioning its data retention policies, and privacy inquiries have been opened in Europe. It has also faced cyberattacks and shown vulnerabilities to algorithmic jailbreaking.

However, organizations can sidestep these issues by hosting safer versions of DeepSeek on private data center servers or on secure platforms like Amazon AWS or Microsoft Azure. These vendors offer enhanced safeguards that comply with privacy laws and regulatory standards.

This way, AI applications and users can take advantage of DeepSeek’s cost-effective capabilities with greater peace of mind. Amazon AWS, Groq, Hugging Face, Microsoft Azure, Databricks, and Perplexity all make versions of DeepSeek available to users.

Q: Does DeepSeek's compute and power efficiency mean lower capital spending on AI infrastructure (including NVIDIA chips)?

Not immediately. While DeepSeek's efficiency represents a notable jump in the "compute multiplier", major players like Amazon and Meta have maintained or even increased their capital expenditure guidance for 2025. The spending focus is shifting toward inference (the computation used when AI answers users’ queries) rather than training, as companies prepare for broader AI adoption.

Over time, these efficiency gains will likely "shift the curve", as Anthropic’s CEO Dario Amodei describes in this long blog post, allowing organizations to achieve better results with the same investment. But rather than reducing spending, companies typically reinvest these gains into more advanced models and capabilities.

While overall usage will grow, the computational intensity of individual applications may decline as more efficient models become standard. This shift could ease some concerns about AI's environmental impact while broadening its societal reach.

Q: If DeepSeek disappears tomorrow, will things go back to where they were pre-DeepSeek?

No. The release of DeepSeek has permanently altered the AI landscape by successfully challenging three narratives: (1) AI scaling laws; (2) the performance superiority of closed models built on the best talent and compute; and (3) the belief that AI's compute and energy demands cannot be met without an unprecedented buildout. In other words, even if DeepSeek disappeared tomorrow, it would already have fulfilled its historic role through its breakthrough contribution to open-source AI development.

Somewhat ironically, OpenAI CEO Sam Altman recently admitted that keeping OpenAI closed-source might have been “on the wrong side of history.”

Additional Strategic Considerations for Boards

Here are additional strategic considerations for boards:

1. Cost Optimization: Evaluate how declining AI costs can impact your organization’s budget allocation and return on investment (ROI) expectations.

2. Open-Source Opportunities: Explore how leveraging open-source models like DeepSeek (or its safer derivatives) can accelerate innovation.

3. Responsible Implementation: Lead discussions on guardrails and ethical AI deployment to safeguard against bias, misuse, and data breaches.

AI Makes Its Mark on Research

Suddenly, research assistants are at our fingertips. Google's Gemini with Deep Research scours thousands of websites to compile reports. DeepSeek delivers reasoning without complex prompts. Now OpenAI's Deep Research (ODR) generates, in minutes, detailed industry analyses that once took analysts days.

When tasked with a SaaS industry analysis, ODR produced a 50-page report in 30 minutes, covering competition, pricing trends, and profitability metrics. The quality approaches that of the sell-side research I reviewed as an equity investor. Its ability to synthesize logical arguments and connect data points into coherent narratives stands out.

Yet limitations persist. Citations in longer reports can be unreliable, and outputs lack differentiated viewpoints or compelling storytelling. Still, the potential is clear: imagine augmenting these tools with proprietary data, research archives, management interviews, and exclusive datasets. AI could absorb institutional knowledge while handling routine tasks.

Case Study: Management Quality Analysis

The implications stretch further. Recently, a fundamental equity investor posed a question: How do we truly assess management quality? Traditional metrics like quarterly targets often miss the long-term impact of bold moves. When Amazon bet on cloud computing or Netflix launched ad-tier subscriptions, conventional analyses might have flagged these moves as risky.

Here, I believe AI’s reasoning capabilities offer new perspectives. Reinforcement learning (RL) models can simulate how strategic decisions play out over years, testing thousands of scenarios. But integrating AI into management assessment has been slow. Seasoned investors’ intuition resists codification, and data scarcity complicates training, since pivotal decisions (e.g., major acquisitions) are rare. Simulation complexity compounds the challenge, as outcomes intertwine with market shifts and competition.

The path forward likely blends methods: supervised learning for baseline analysis, RL for long-term insights, and human judgment for context and ethics. Investment teams could systematize management assessments, generate synthetic training data for rare scenarios, and layer domain expertise onto open-source frameworks.
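To illustrate what "testing thousands of scenarios" looks like in practice, here is a minimal sketch that simulates the net present value (NPV) of a hypothetical strategic bet across 10,000 scenarios. Note that this is plain Monte Carlo simulation, a simpler stand-in for the RL approach described above: RL would go further and learn which decisions to take from simulated outcomes. The function `simulate_bet` and every parameter (cost, payoffs, success probability, discount rate) are made up for illustration and not calibrated to any company.

```python
import random
import statistics

def simulate_bet(upfront_cost, annual_payoff_mean, annual_payoff_sd, success_prob,
                 years=10, discount_rate=0.08, n_scenarios=10_000, seed=0):
    """Monte Carlo NPVs of a hypothetical strategic bet.

    In each scenario the bet either succeeds (probability success_prob) and then
    yields a noisy annual payoff for `years` years, or fails and yields nothing.
    Payoffs are discounted back to today at `discount_rate`.
    """
    rng = random.Random(seed)  # fixed seed so results are reproducible
    npvs = []
    for _ in range(n_scenarios):
        npv = -upfront_cost  # the upfront investment is paid in every scenario
        if rng.random() < success_prob:
            for t in range(1, years + 1):
                payoff = rng.gauss(annual_payoff_mean, annual_payoff_sd)
                npv += payoff / (1 + discount_rate) ** t
        npvs.append(npv)
    return npvs

npvs = simulate_bet(upfront_cost=100, annual_payoff_mean=40, annual_payoff_sd=15,
                    success_prob=0.4)
loss_share = sum(v < 0 for v in npvs) / len(npvs)
print(f"mean NPV: {statistics.mean(npvs):.1f}")
print(f"share of scenarios losing money: {loss_share:.0%}")
```

The output is a distribution rather than a single forecast: with these assumed inputs the bet has a positive average NPV yet loses money in most scenarios, which is exactly the kind of bold move a quarterly-target lens would flag as risky.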

Research Teams Shrink as AI Drives 10x Gains

This shift has profound implications. If AI drafts 95% of IPO prospectuses, research reports may follow. The edge will lie in the 5% of human insight that anticipates trends. Research teams in investing, consulting, and business intelligence may shrink, focusing talent on asking great questions and spotting hidden patterns: smart people in a room debating strategic trade-offs and scenarios, while AI prepares the data and presentations. Many researchers may face an identity crisis if they don’t adapt and gain clarity on their unique value.

For firms like McKinsey and Gartner, this threatens their value propositions. Success hinges on cultivating human-AI symbiosis, where machines handle the grind and minds chase the extraordinary.

The Future of Corporate Finance [Reading Recommendation]

AI will eventually automate almost everything in the accounting and finance functions. But some things resist automation. Not because the tech can’t do it (or at least try), but because people won’t let it.

High-stakes judgment calls: AI will support modeling the scenarios, but someone still has to sign off. As a CFO whose job and reputation are on the line, I’d be hard-pressed to hand over high-stakes decision agency to AI.

Accountability & ownership: You can’t fire (or shame) a bot… yet

Relationships: The board doesn’t want a chatbot pitching the next acquisition. Investors will want to talk to management, not a computer.

That’s a short list. Everything else? It’s on the chopping block.

The above comes from one of my favorite newsletters: CFO Secrets. Read more in the first piece of the series here and follow along.

Many finance leaders are wrestling with this existential question of AI's impact on their profession. The future seems to be arriving faster than expected, ready or not. For those interested in exploring these themes further, I'll be speaking on two panel discussions on this topic this week (here and here). If you're in the Bay Area, I'd love to see you there and continue this important conversation in person.

If you’re finding this newsletter valuable, share it with a friend, and consider subscribing if you haven’t already. I greatly appreciate it.

Sincerely,

Joyce 👋