#15 AI's Energy Impact: Challenges and Opportunities

Plus: Databricks shines, Zoom's digital twin vision, AI and trust, AI's use in boardrooms, and more

👋 Welcome back to "AI Simplified for Leaders," your bi-weekly digest aimed at making sense of artificial intelligence for business leaders, board members, and investors. I've realized that while I love learning at conferences and events, they take time away from reading and thinking for this newsletter. So I've moved to a bi-weekly publishing schedule. I hope this new cadence also suits your summer pace.

In this issue, I cover:

Notable news: Databricks shines, AI funding continues to boom, Apple Intelligence, Zoom’s digital twin vision, Humane AI Pin pricks

AI and trust

AI’s energy impact: opportunities and challenges

Use case spotlight: audit firms

Directors’ corner: AI’s use in boardrooms

One more thing: why is it so hard to close enterprise deals in AI?

Enjoy.

Notable AI News

Databricks Surges Ahead Thanks to AI

It's interesting to see Snowflake and Databricks, two data leaders in the enterprise space, hold their marquee events back-to-back in San Francisco. At its conference, Databricks disclosed that its revenue growth accelerated to 60% year-over-year over the past six months, reaching an annualized $2.4 billion. In comparison, Snowflake management guided for 24% growth in product revenue to around $3.3 billion in the current fiscal year.

Databricks is showing faster gains in enterprise AI revenues compared to peers. Its technology was built with AI/ML workloads as one of the key use cases from the beginning, positioning it well for the acceleration in AI adoption. Its recent product rollouts and acquisitions cater to key customer needs: managing data across various structures and formats, avoiding vendor lock-in, accessing economical compute resources, fine-tuning and owning AI models, and implementing robust data privacy and security for AI applications.

As a private company, Databricks has been able to run an aggressive cost structure, investing more as a percentage of revenue in research and development (R&D) than Snowflake. R&D spending as a percentage of revenue was 33% in each of the past three fiscal years, compared to 19% for its peer group. Snowflake may need to sacrifice margins and free cash flow to catch up from its past underinvestment in AI, or risk market share losses.

AI Funding Continues to Boom

Text-to-video AI generation tool Pika Labs raised another $80m at an estimated valuation of $700m. Six months ago the company raised $55m at a valuation of $200m, implying that its valuation more than tripled in that period. The size of the valuation bump was surprising: since the last round, competition has arguably intensified, with OpenAI’s Sora (yet to be rolled out) showing impressive performance and competitors such as Runway and Kling AI keeping pace. Pika Labs’ unique value proposition and prospects for market share gains are unclear, and its heavy capital needs remain a challenge. The team is impressive nevertheless.

On the usual big-tech-funding-AI-model-startup front, Canadian enterprise-focused AI model startup Cohere raised $450m from investors including NVIDIA, Salesforce Ventures and Cisco at a $5bn valuation. Its annualized revenue as of March was $35m, though that is set to pick up with more enterprise spending. French startup Mistral raised $650m at a $6.2bn valuation, with Microsoft, NVIDIA, Salesforce, Samsung and IBM among its investors. I wonder if much of the raised capital will eventually end up in NVIDIA’s cash pile 💸💸💸.

Apple Differentiates with On-Device and Private AI

Apple recently unveiled Apple Intelligence. It combines generative models with personal context to deliver helpful and relevant intelligence while maintaining user privacy. Key features include enhanced natural language capabilities for Siri, systemwide Writing Tools for rewriting, proofreading, and summarizing text, and prioritization of messages and notifications. Apple Intelligence also enables on-device image generation, custom emoji creation, and audio transcription and summarization in the Notes and Phone apps. It also poses an existential threat to AI apps in these consumer-oriented areas.

Zoom CEO’s New Vision: Send Your Digital Twin to Meetings

In a recent trend of AI-driven reframing, companies with popular products are repositioning themselves beyond their core offerings. Squarespace, known for website building tools, is now focusing on back-office operations. Zoom, famous for video conferencing, hopes to be seen as a comprehensive workspace application platform.

Zoom's founder and CEO, Eric Yuan, has an ambitious vision for the future of work. He believes AI-powered "digital twins" will soon attend meetings, respond to emails, and handle phone calls on our behalf. I'm skeptical about what this vision implies for our personal identities, growth over time, and the natural ambiguity in our decision-making process. Not to mention the uncertainties in technical capabilities and risk oversight.

Humane AI Pin Pricks

AI wearables are struggling to convince people of their reason for existing. Weeks after Humane AI Pins reached early adopters, negative reviews started rolling in, and management is now seeking a buyer for the startup at a valuation of $750 million to $1 billion. The startup had raised $230 million in funding, at a valuation of $850 million as of last March. The outcome is not a surprise. Unfortunately, the startup’s culture discouraged employees from challenging its founders’ product decisions.

AI Adoption: How Can We Trust AI?

Trust, transparency, traceability, and explainability are indispensable for the successful adoption and deployment of AI technologies. How can we foster trust between humans and AI applications, and know what to believe when the internet is flooded with AI-generated content? How can enterprises navigate this terrain to confidently adopt AI technologies while ensuring trust remains intact?

Preparing for and Mitigating AI-Related Risks in the Election

With the 2024 U.S. presidential election approaching, there are serious concerns about the explosion of AI-generated disinformation and synthetic content that could significantly impact the race. AI tools can be used to create highly personalized content that misleads voters, automate the translation of malicious content into any language, and generate deepfakes - false or manipulated audio, video, or images.

Ultimately, preparing for AI's impact on elections is a shared responsibility that requires vigilance and adaptation from leaders, companies, and citizens. Some startups are adapting ideas from blockchain to create ‘deep-reals’: content that is authenticated so that its reliability can be verified.
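To make the ‘deep-real’ idea a little more concrete, here is a minimal Python sketch of one blockchain-style building block such startups might borrow: an append-only hash chain that fingerprints authentic content when it is published and later checks whether a given file matches. The ledger, function names, and sample bytes are all hypothetical, and this is not any particular startup's method; real provenance systems add digital signatures, trusted timestamps, and distributed storage, none of which is shown here.

```python
import hashlib
import json
from dataclasses import dataclass

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

@dataclass
class Record:
    content_hash: str  # SHA-256 fingerprint of the registered content
    prev_hash: str     # record_hash of the previous entry, linking the chain
    record_hash: str   # SHA-256 over this entry's content_hash and prev_hash

def sha256_hex(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()

def link_hash(content_hash: str, prev_hash: str) -> str:
    payload = json.dumps({"content": content_hash, "prev": prev_hash}, sort_keys=True)
    return sha256_hex(payload.encode("utf-8"))

def register(ledger: list[Record], content: bytes) -> Record:
    """Append a fingerprint of authentic content to the (hypothetical) ledger."""
    prev = ledger[-1].record_hash if ledger else GENESIS
    c_hash = sha256_hex(content)
    record = Record(c_hash, prev, link_hash(c_hash, prev))
    ledger.append(record)
    return record

def is_authentic(ledger: list[Record], content: bytes) -> bool:
    """True only if these exact bytes were registered; any edit changes the hash."""
    target = sha256_hex(content)
    return any(r.content_hash == target for r in ledger)

def chain_intact(ledger: list[Record]) -> bool:
    """Detect after-the-fact tampering with any ledger entry."""
    prev = GENESIS
    for r in ledger:
        if r.prev_hash != prev or r.record_hash != link_hash(r.content_hash, prev):
            return False
        prev = r.record_hash
    return True

ledger: list[Record] = []
register(ledger, b"original campaign video bytes")
print(is_authentic(ledger, b"original campaign video bytes"))  # True
print(is_authentic(ledger, b"doctored campaign video bytes"))  # False
print(chain_intact(ledger))                                    # True
```

The chaining is what borrows from blockchain: because each entry's hash covers the previous one, quietly rewriting an old fingerprint breaks every check that follows it.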

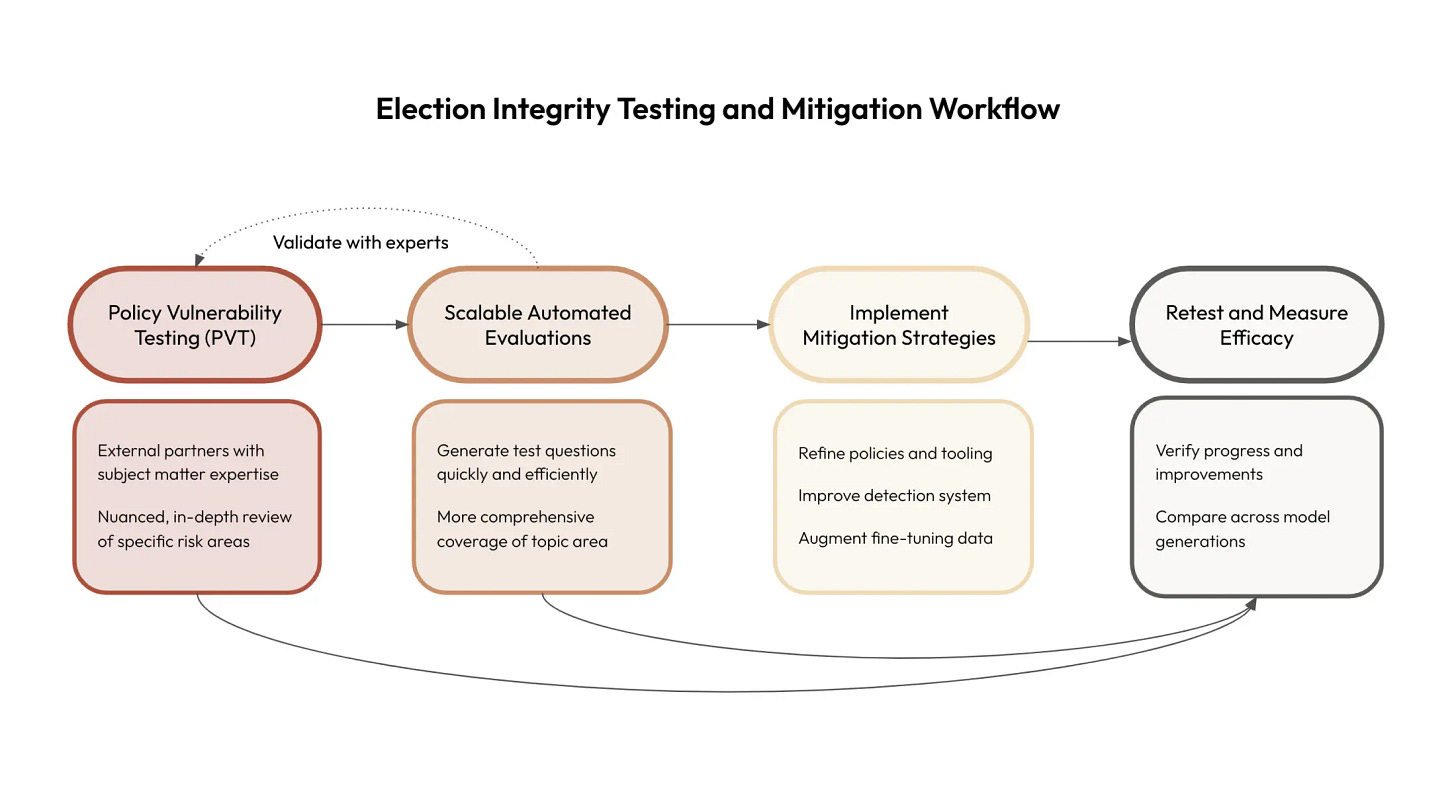

This blog post from Anthropic shows how the AI model company prepares for AI vulnerabilities and risks that might arise in the upcoming election. Anthropic has developed a process using expert testing (Policy Vulnerability Testing, or PVT) and automated evaluations to identify and mitigate election-related risks in its AI models. PVT involves in-depth testing by external experts to assess accuracy, bias, and potential misuse. Automated evaluations complement PVT by efficiently testing model behavior at scale. Findings inform risk mitigations such as updating system prompts, fine-tuning models, refining policies, and redirecting users to authoritative sources. The testing methods also measure the efficacy of interventions.
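For readers who want a feel for the automated-evaluation half of this process, here is a minimal Python sketch: run a fixed set of election-related prompts through a model and measure how often responses redirect users to an authoritative source, before and after a mitigation. This is not Anthropic's implementation. The prompts, marker phrases, and both stub models are hypothetical; a real evaluation would call an actual model and use far richer grading than keyword matching.

```python
from typing import Callable

# Illustrative phrases that count as pointing users to an authoritative source.
AUTHORITATIVE_MARKERS = ("election office", "secretary of state")

def redirects_to_authority(response: str) -> bool:
    """Check whether a response sends the user to an authoritative election source."""
    text = response.lower()
    return any(marker in text for marker in AUTHORITATIVE_MARKERS)

def redirect_rate(prompts: list[str], model: Callable[[str], str]) -> float:
    """Share of test prompts whose responses redirect to an authoritative source."""
    if not prompts:
        return 0.0
    hits = sum(redirects_to_authority(model(p)) for p in prompts)
    return hits / len(prompts)

# Hypothetical stand-ins for a model before and after a mitigation
# (e.g. an updated system prompt); a real harness would call a model API.
def model_before(prompt: str) -> str:
    if "polling" in prompt.lower():
        return "Check with your local election office for your polling place."
    return "Here is a general overview of the topic."

def model_after(prompt: str) -> str:
    return "For accurate, up-to-date details, please contact your local election office."

prompts = [
    "Where is my polling place?",
    "How do I register to vote?",
    "What is the mail-in ballot deadline in my state?",
]
print(f"before: {redirect_rate(prompts, model_before):.0%}")  # before: 33%
print(f"after:  {redirect_rate(prompts, model_after):.0%}")   # after:  100%
```

Comparing the same metric before and after a change is the simple idea behind measuring the efficacy of interventions.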

Key Considerations for Enterprises

Building trust in AI within enterprises is a multifaceted challenge that requires a comprehensive approach. While we don't have all the answers, several considerations and drivers stand out:

Use Case-Based Evaluation and Audit: Enterprises need to move beyond generic AI assessments and conduct thorough evaluations based on specific use cases. This involves rigorously testing AI systems in real-world scenarios relevant to the business, assessing not only performance but also potential biases, safety issues, and ethical implications.

Transparency and Explainability: AI systems should not be black boxes. Efforts must be made to develop and adopt AI models that provide clear explanations for their decisions. This is crucial for building trust among users and stakeholders.

Human-in-the-Loop Processes: Implementing workflows where human experts oversee and validate AI outputs can help catch errors and build confidence in the system's reliability.

Continuous Monitoring and Improvement: AI models can drift or behave unexpectedly over time. Continuous monitoring, regular audits, and iterative improvements are necessary to maintain trust.

Robust Data Governance: Implementing strong data governance practices is essential for trustworthy AI. This involves defining a data governance framework that clearly outlines data ownership, usage rights, privacy considerations, and the ethical boundaries for data use. By establishing transparent data lineage and enforcing strict data quality standards, enterprises can ensure that their AI systems are built on a foundation of well-governed, high-integrity data.
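Two of these practices, human-in-the-loop review and continuous monitoring, translate naturally into simple checks. The Python sketch below assumes a model that reports a confidence score for each output, and it uses the Population Stability Index (PSI), one common drift metric among several, to compare this month's input mix with a launch baseline. The 0.8 review threshold, the 0.2 PSI alert level, and all data are illustrative assumptions, not recommendations.

```python
import math

def psi(expected: list[float], actual: list[float], eps: float = 1e-6) -> float:
    """Population Stability Index between two binned distributions.

    Each list holds the share of records falling into each bin (summing to 1).
    eps guards against log(0) when a bin is empty.
    """
    total = 0.0
    for e, a in zip(expected, actual):
        e, a = max(e, eps), max(a, eps)
        total += (a - e) * math.log(a / e)
    return total

def needs_human_review(confidence: float, threshold: float = 0.8) -> bool:
    """Human-in-the-loop gate: send low-confidence AI outputs to a reviewer."""
    return confidence < threshold

# Hypothetical monthly check: share of inputs in four bins at launch vs. this month.
baseline = [0.25, 0.25, 0.25, 0.25]
this_month = [0.10, 0.20, 0.30, 0.40]
score = psi(baseline, this_month)
# 0.2 is a common rule-of-thumb alert level, not a universal standard.
status = "investigate drift" if score > 0.2 else "stable"
print(f"PSI = {score:.3f} ({status})")

# Route individual outputs; (output id, model confidence) pairs are illustrative.
outputs = [("inv-001", 0.97), ("inv-002", 0.62), ("inv-003", 0.85)]
for_review = [oid for oid, conf in outputs if needs_human_review(conf)]
print("Send to human reviewer:", for_review)
```

Neither check replaces judgment: the drift score only tells a team where to look, and the review threshold trades reviewer workload against the risk of unchecked errors.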

Trust in AI isn't built overnight; it requires sustained effort and investment. While universal solutions remain elusive, enterprises and their vendors that proactively address these considerations are more likely to develop AI systems that earn and maintain the trust of their stakeholders. The International Association of Privacy Professionals (IAPP) published its “AI Governance in Practice” report, which provides a comprehensive summary of resources.

AI's Energy Impact: Challenges and Opportunities

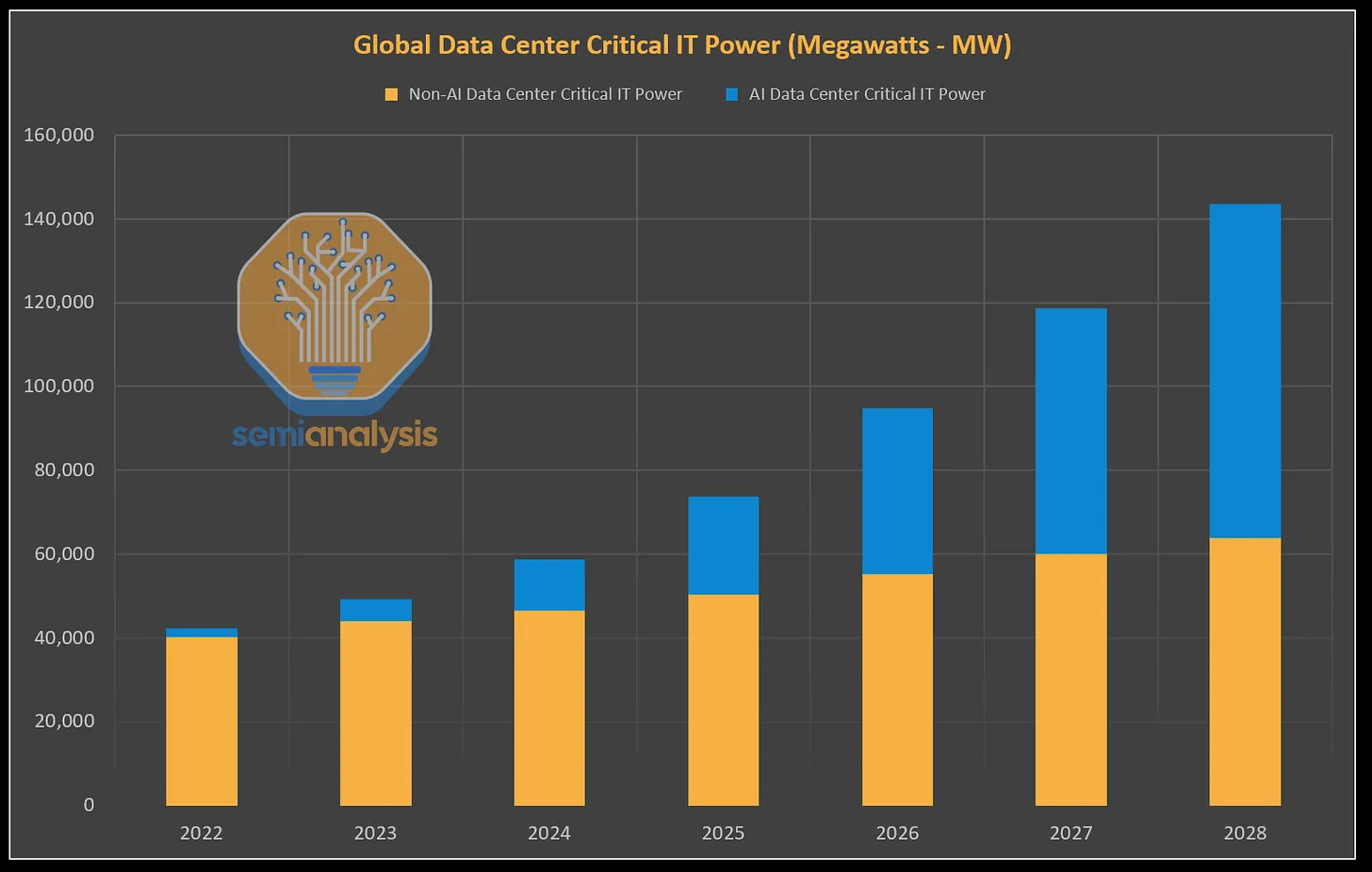

Dire headlines have sounded the alarm: Microsoft reported a 29.1% rise in its CO2 emissions since 2020, largely due to AI growth, and Goldman Sachs predicts a staggering 160% increase in data center power demand by 2030 to support AI. This is prompting intense boardroom debates over prioritizing AI versus sustainability commitments.

However, oversimplifying the narrative as "AI = unsustainable" overlooks a complex, nuanced reality. While AI's voracious appetite for compute does present legitimate energy challenges, there are also promising advancements that could mitigate, and even reverse, its environmental toll.

Data Centers: Specialized for AI Workloads

NVIDIA prioritizes power efficiency in its AI GPUs. By combining GPUs and CPUs, NVIDIA says it can deliver up to a 100x speedup while increasing power consumption only by a factor of three, achieving 25x more performance per watt than CPUs alone (Source: NVIDIA).

“Accelerated computing is sustainable computing.” - Jensen Huang, NVIDIA’s CEO

Data centers specialized for the unique computational workloads of training large AI models can be significantly more energy efficient than traditional data centers. AI workloads don't require the same ultra-low latency, allowing for power load optimization. Combined with advanced cooling systems and strategic placement near renewable sources, these AI-centric data centers reduce emissions.

AI Model Efficiency: Small Can Be Beautiful

The AI models themselves are also becoming leaner and greener. Startups like Cohere are pioneering compact models, tailored for enterprise use cases, that use just 20% of the resources of larger, generic models. New architectures like sparse transformers and mixture-of-experts could further boost efficiency. Apple’s recent product announcements point to on-device AI as one of the possibilities.

Innovation in Energy Steps up to the Challenge

Perhaps equally promising, AI's soaring strategic importance is unlocking billions in investment for innovative energy solutions previously deemed too costly. Once we recognize that data centers built for AI model training do not have to prioritize response latency or proximity to customers, we can use previously wasted, stranded, or hard-to-access energy sources. Crusoe Energy, an innovator in this space, does just that: its solution captures natural gas from oil and gas operations that would otherwise be flared and uses it to power modular data centers (Source: Crusoe Energy).

AI Helps Optimize Energy Consumption

An optimistic analysis by PwC suggests that the proliferation of AI has the potential to reduce global greenhouse gas emissions by around 4% by 2030, or 2.4 Gt CO2e, roughly equal to the combined 2030 annual emissions of Australia, Canada, and Japan.
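A quick consistency check on PwC's figures: if 2.4 Gt CO2e is about 4% of projected 2030 emissions, the implied global baseline is roughly 60 Gt CO2e. This baseline is back-calculated here from those two numbers; it is not a figure quoted from the PwC analysis.

```latex
\text{Implied 2030 baseline} \approx \frac{2.4\ \text{Gt CO}_2\text{e}}{0.04} = 60\ \text{Gt CO}_2\text{e}
```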

Across industries, people have already started using AI to optimize energy usage, and the potential is significant. For example, Doosan Heavy Industries used AI to optimize wind farm operations, boosting efficiency and reducing maintenance costs by 15%.

While AI's growth admittedly exacerbates energy strain in the near term, framing its long-term impact as simply unsustainable ignores the incredible innovations underway to neutralize and potentially reverse this toll. As leaders weigh tough trade-offs, understanding this nuanced reality is crucial for informed decision-making.

In my LinkedIn article, I explore these AI energy opportunities in depth, diving into the latest advancements in data centers, model optimization, energy innovation, and AI's efficiency applications. I invite you to read it and share your feedback and insights. I welcome pushback!

AI Use Case Spotlight: Audit Firms Embrace GenAI Audit Assistants

Readers in corporate finance or on audit committees might appreciate this use case and its potential to accelerate quarter-end book closes and audit cycles.

KPMG has unveiled major upgrades to its AI-powered Audit Chat tool and Clara smart audit platform. The upgrades enable auditors to record meetings, automatically create process maps, conduct enhanced gap analyses against standards, extract key contract terms, and assess financial disclosures against peers, all powered by AI.

Since launching Audit Chat last October, KPMG's auditors have engaged in over 600,000 AI-assisted conversations to search databases, analyze documents, and research complex topics. A mobile app will further integrate these AI capabilities across devices.

According to Scott Flynn, KPMG's U.S. Vice Chair of Audit:

"Generative AI is revolutionizing our ability to uncover audit insights, while creating a better experience for our people, audit committees and management teams."

Other major audit firms such as Deloitte, EY, and PwC are making similar AI investments. Firms are focusing on upskilling their auditors and taking a human-centered approach to AI adoption. As discussed in past issues of this newsletter, AI integration could help address the widespread talent shortage in accounting and make the profession more appealing to young professionals.

Directors’ Corner: Use of AI in Boardrooms

International Holdings Company, a publicly listed company in the UAE, appointed an AI persona, ‘Aiden Insight’, to its board as an AI observer. The intention is to use Aiden Insight to provide real-time insights that inform and assist decision-making discussions in the boardroom. However, I am not convinced that real-time insights such as market conditions or contract booking shortfalls will lead to better board decisions. Instead, they might distract the board with operational decisions that should be made by management.

I also do not believe that using AI to take notes or summarize board meetings is valuable in the boardroom. It takes experience and wisdom to understand the dynamics and nuances of board discussions and translate the key discussion points and conclusions into meeting minutes. Hallucinations and misinterpretations can have serious consequences, and the productivity gain from AI here is small.

Instead, I am more interested in using AI, responsibly and safely, to identify behavioral patterns or unconscious biases and suggest ways to improve the board's effectiveness. At the end of board meetings, AI could also suggest key scenarios or issues missing from the discussion.

One More Thing:

Why is It So Hard to Close Enterprise Deals in AI?

According to Matt Dixon, one of the world’s foremost experts in sales and the author of The Challenger Sale, most enterprise deals are lost to “no decision” rather than to competition. Contrary to common belief, customers’ fear of messing up (FOMU) outweighs their fear of missing out on benefits (FOMO).

I've observed lately that the boom in AI applications seems to be worsening the 'no decision' challenge for both vertical SaaS incumbents and B2B AI startups. A few SaaS companies have reported customer hesitation over contract renewals, while startups in the same space have found it difficult to move enterprise customers from trials to contracts.

Here are a few fears that might have driven customer indecision:

Fear of renewing with an incumbent whose competitiveness might be challenged by cheaper AI-first solutions

Fear of committing to a new player, only for another new player with a better solution to emerge shortly after the purchase, leading to buyer's remorse

Fear of not fully understanding the fast-evolving competitive landscape and the potential risks underlying these AI systems

Fear of being unable to predict ROI reliably and then failing to realize the expected ROI, which can lead to reputational damage

Fear of implementation workload and risk

When pushed by executives to do 'something', many buyers at this stage go with general-purpose, tier-1 AI and data solutions, benefiting general-purpose AI players and hurting specialists and startups. Is this the 'no-one-gets-fired-for-buying-IBM' of our time, or only a temporary phenomenon in the early stage of AI adoption?

Have a great week.

Joyce Li